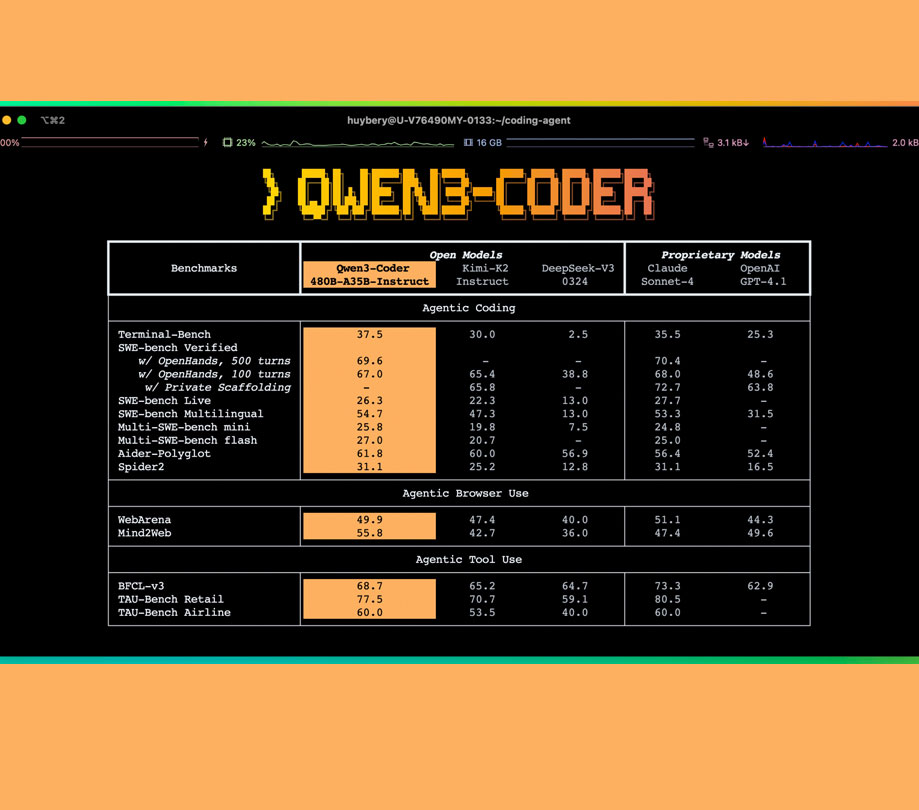

"Qwen3-Coder is Alibaba's most powerful code model to date, featuring a 480B-parameter Mixture-of-Experts architecture with advanced capabilities in agentic coding and tool integration."

"The model is open-sourced alongside Qwen Code, a CLI tool for agentic coding. The model itself was pretrained on 7.5 trillion tokens, 70% of which are code."

"Post-training relies on reinforcement learning driven by code execution, which improves performance on complex, multi-step tasks, and it runs on large-scale cloud infrastructure."

"Alibaba plans to keep developing Qwen3-Coder, with a focus on self-improving coding agents that take on more of the workload in software engineering."

Alibaba has unveiled Qwen3-Coder, a Mixture-of-Experts code model with 480 billion total parameters, of which 35 billion are active per token. It is designed for agentic coding and agentic browser use, and Alibaba reports top-tier benchmark results in both areas. The model natively supports a 256K-token context and can extrapolate to 1 million tokens. Alongside the model, Alibaba is releasing Qwen Code, a command-line tool for agentic coding. Pretraining used 7.5 trillion tokens, 70% of which are code, and ongoing work centers on reinforcement learning to make the model a stronger coding agent.

Read at App Developer Magazine