"Are you confident? I don't think this question is as simple as it once was. As artificial intelligence (AI) increasingly enters our cognitive world, I've been thinking about a new kind of confidence. It arrives quickly, often wrapped in language that sounds both finished and assured. Sometimes, perhaps often, it reads better than anything we might have written. And yet it leaves behind a curious feeling, a sense that something essential was bypassed."

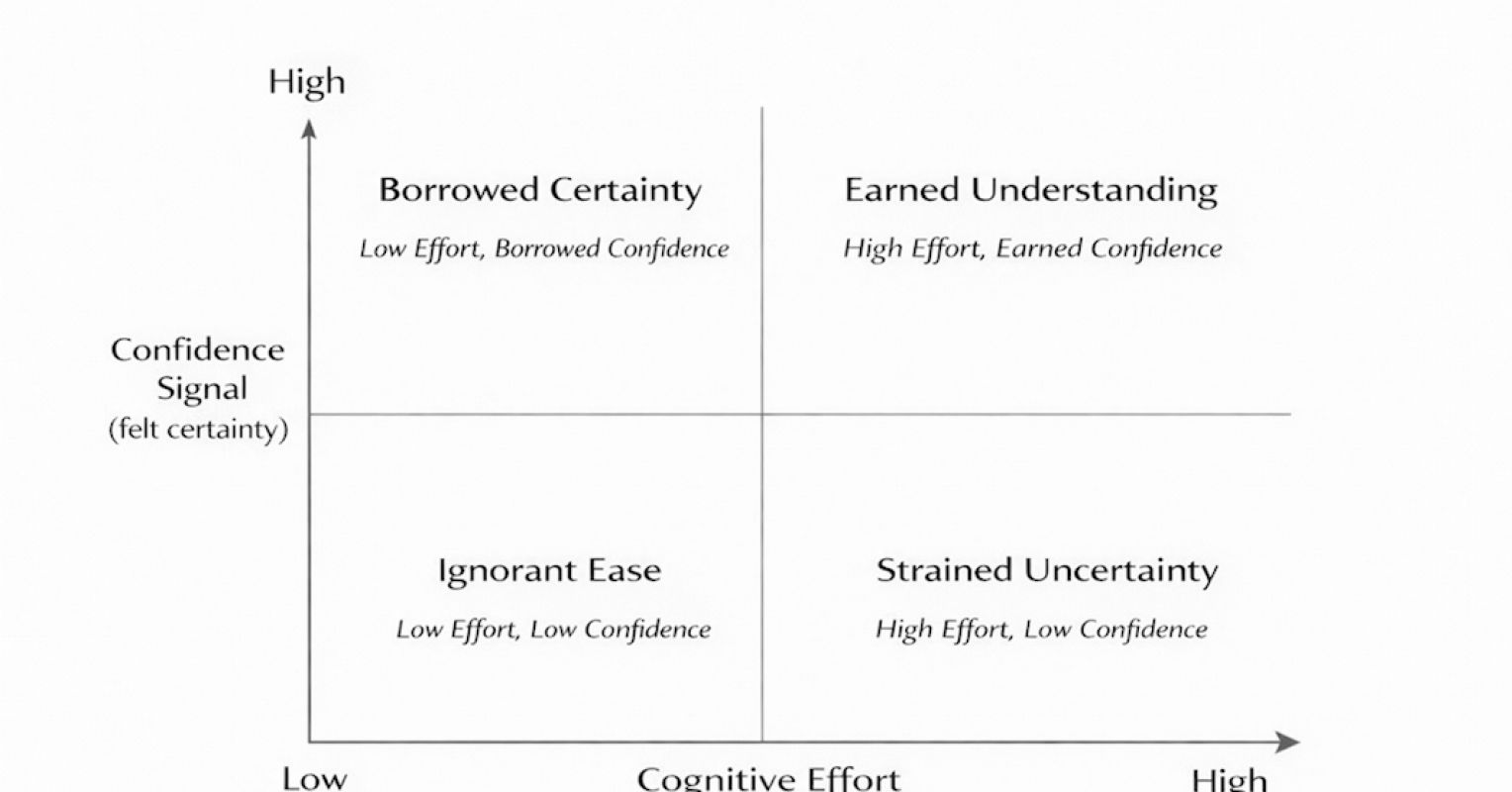

"You ask an AI a serious question. Seconds later, you have an answer that feels solid and even resolved. Do with it what you wish: send it, post it, or act on it. The experience is efficient and oddly satisfying. Still, for many, including me, there's something rather uneasy about it. The answer is good, and the confidence feels real. But the ownership feels uncertain. That unease is not a bug; it's a signal. The signal has a name: borrowed certainty."

AI often supplies rapid, polished answers that create immediate confidence without requiring personal effort or struggle. Such confidence, termed "borrowed certainty," feels real but originates externally and diminishes a person's sense of authorship. When effort becomes disconnected from certainty, individual judgment drifts out of calibration and ownership erodes over time. Traditional concerns about AI focus on accuracy, hallucinations, and bias; a more personal harm is its effect on how learners and users relate to ideas. Sustained reliance on externally generated certainty can weaken skill development, reduce accountability, and obscure who is responsible for choices informed by AI.

Read at Psychology Today
