"We have enough difficulty getting the humans trained to be effective at preventing cyberattacks. Now I've got to do it for humans and agents in combination,"

"[Agents] tend to want to please. How are we creating and tuning these agents to be suspicious and not be fooled by the same ploys and tactics that humans are fooled with?"

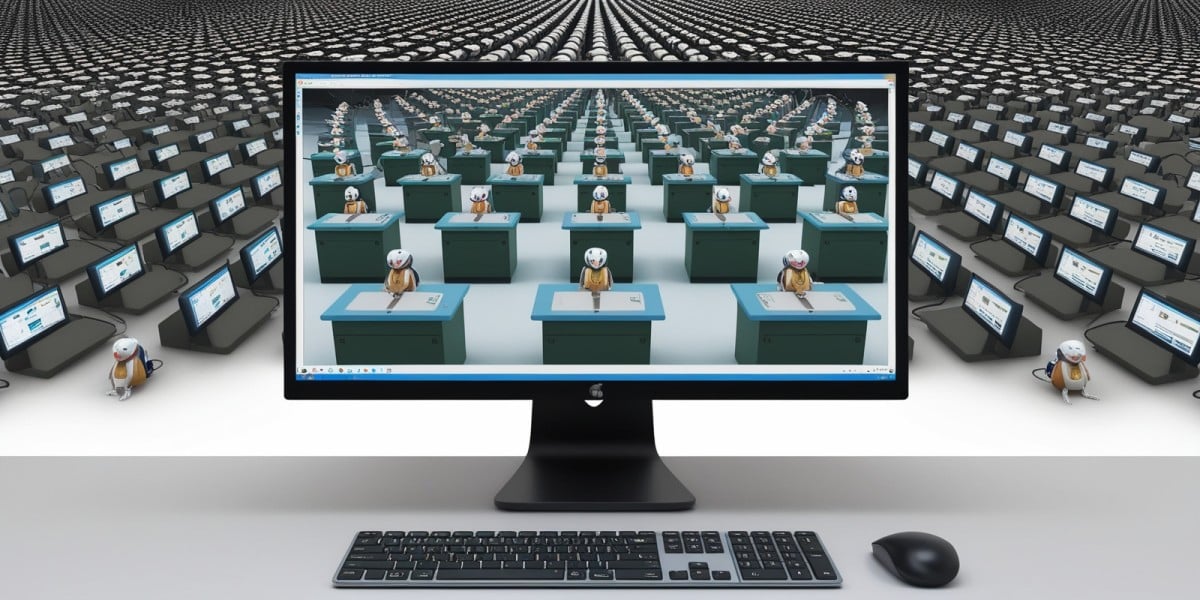

"With agents, you need to think about them as an extension of your team, an extension of your employee base,"

AI agents are being deployed for efficiency, but they introduce new insider-threat risks when granted access to sensitive data or systems. Training humans to prevent cyberattacks is already difficult, and adding agents compounds the training and security challenges. Agents often seek to please and can be manipulated by the same ploys that fool humans, so agent behavior must be tuned for suspicion and restraint. Zero trust and least-privilege access remain primary defenses. Security firms are pursuing mergers and acquisitions to gain AI-focused capabilities, and organizations should treat agents as extensions of their employee base.

Read at The Register
