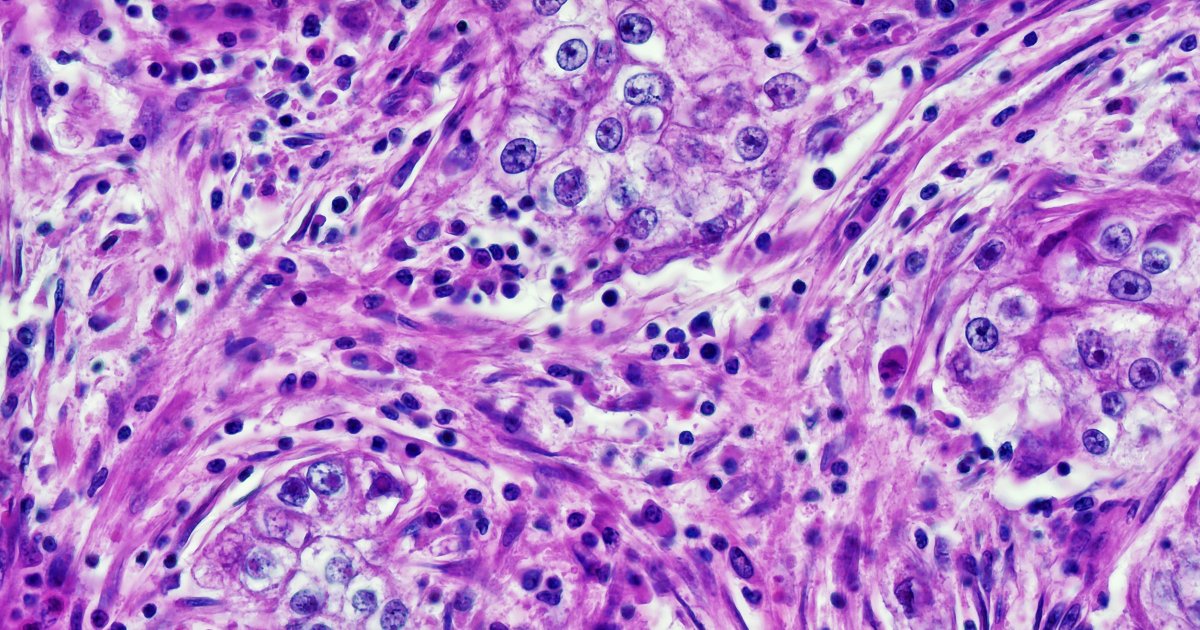

"The alarming findings were published in the journal Cell Reports Medicine, showing that four leading AI-enhanced pathology diagnostic systems differ in accuracy depending on patients' age, gender, and race - demographic data, disturbingly, that the AI is extracting directly from pathology slides, a feat that's impossible for human doctors. To conduct the study, researchers at Harvard University combed through nearly 29,000 cancer pathology images from some 14,400 cancer patients. Their analysis found that the deep learning models exhibited alarming biases 29.3 percent of the time - on nearly a third of all the diagnostic tasks they were assigned, in other words."

"'We found that because AI is so powerful, it can differentiate many obscure biological signals that cannot be detected by standard human evaluation,' Harvard researcher Kun-Hsing Yu, a senior author of the study, said in a press release. 'Reading demographics from a pathology slide is thought of as a "mission impossible" for a human pathologist, so the bias in pathology AI was a surprise to us.'"

"The AI tools were able to identify samples taken specifically from Black people, to give a concrete example. These cancer slides, the authors wrote, contained higher counts of abnormal, neoplastic cells, and lower counts of supportive elements than those from white patients, allowing AI to sniff them out, even though the samples were anonymous."

Four leading AI-enhanced pathology systems show differences in diagnostic accuracy across patients' age, gender, and race, demographic information the models extract directly from the pathology slides. Researchers analyzed nearly 29,000 cancer pathology images from about 14,400 patients and found that the deep learning models exhibited biases in 29.3% of diagnostic tasks. AI can detect obscure biological signals that human pathologists cannot, and models that latch onto age-, race-, or gender-related patterns let those patterns shape tissue analysis, replicating biases that stem from gaps in the training data. For example, the models could identify slides from Black patients, which contained higher counts of neoplastic cells and fewer supportive elements, a shortcut that can produce unequal diagnostic outcomes and raises safety concerns.

Read at Futurism
