Big AI companies such as OpenAI and Anthropic like to talk about their bold quest for AGI, or artificial general intelligence. The definition of that grail has proved to be somewhat flexible, but in general it refers to AI systems that are as smart as human beings at a wide array of tasks. AI companies have used this "quest" narrative to win investment, fascinate the tech press, and charm policymakers.

"There are tasks where many other animals are better than we are," LeCun said on a recent Information Bottleneck webcast. "We think of ourselves as being general, but it's simply an illusion because all of the problems that we can apprehend are the ones that we can think of, and vice versa," he said. "So we're general in all of the problems that we can imagine, but there's a lot of pro…"

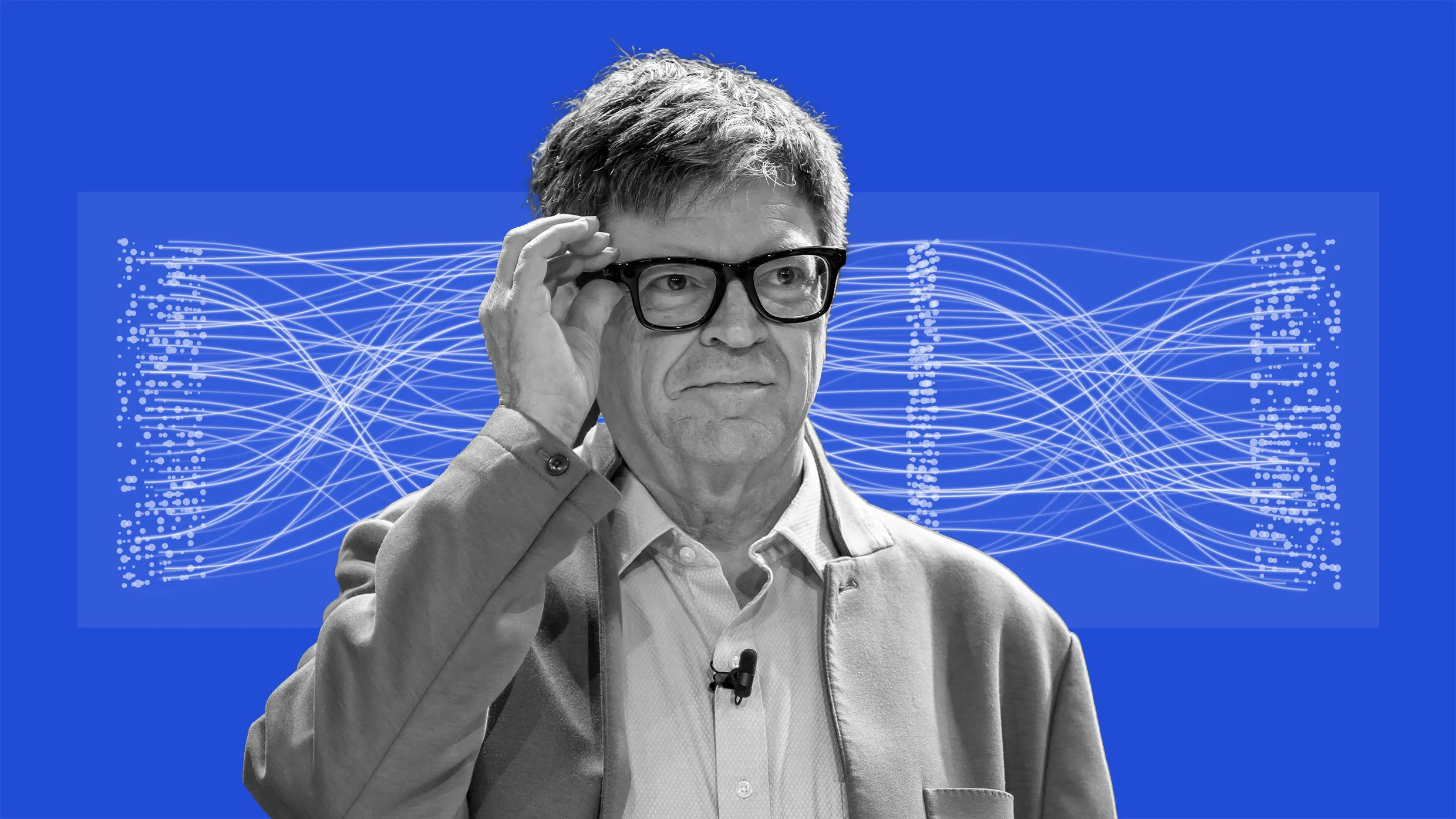

Yann LeCun rejects the idea of artificial general intelligence, arguing that humans are not true generalists because their abilities vary widely across tasks. Big AI firms promote an AGI narrative to attract investment and media attention and to influence policymakers. LeCun notes that humans excel at specific physical and social tasks but underperform machines at chess and arithmetic, and that many animals outperform humans in some domains. The industry should prioritize narrower, achievable AI capabilities over chasing a flexible, ill-defined AGI goal. Databricks secured over $4 billion in new funding, and Google released the Gemini 3 Flash model.

Read at Fast Company
