"Compared to training, inference is much more sensitive to memory bandwidth. For each token (think words or punctuation) generated, the entirety of the model's active weights needs to be streamed from memory. Because of this, memory bandwidth puts an upper bound on how interactive a system can be - that is, how many tokens per second per user it can generate. To address this, Maia 200 has been equipped with 216GB of high-speed memory spread across what appears to be six HBM3e stacks, good for a claimed 7TB/s of bandwidth."
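The bandwidth ceiling described above can be sketched as simple arithmetic. This is a rough back-of-the-envelope estimate, not a benchmark. The 7TB/s and 216GB figures come from the article; the model size (100 billion active parameters) and the FP4 storage format (0.5 bytes per parameter) are hypothetical values chosen for illustration. It assumes decimal units and that every active weight is read exactly once per token for a single user, and it ignores KV-cache traffic, batching, compute limits, and multi-chip sharding.

```python
def max_tokens_per_second(bandwidth_bytes_per_s: float,
                          active_params: float,
                          bytes_per_param: float) -> float:
    """Upper bound on per-user decode rate if all active weights
    are streamed from memory once for every generated token."""
    weight_bytes = active_params * bytes_per_param
    return bandwidth_bytes_per_s / weight_bytes


# Figures claimed in the article (decimal units assumed).
MAIA_200_BANDWIDTH = 7e12   # 7 TB/s
MAIA_200_CAPACITY = 216e9   # 216 GB

# Hypothetical model, chosen only for illustration.
ACTIVE_PARAMS = 100e9       # 100 billion active parameters
FP4_BYTES_PER_PARAM = 0.5   # 4 bits per weight

weight_bytes = ACTIVE_PARAMS * FP4_BYTES_PER_PARAM
fits_in_memory = weight_bytes <= MAIA_200_CAPACITY
bound = max_tokens_per_second(MAIA_200_BANDWIDTH, ACTIVE_PARAMS, FP4_BYTES_PER_PARAM)

print(f"weights: {weight_bytes / 1e9:.0f} GB, fits on one chip: {fits_in_memory}")
print(f"bandwidth-bound ceiling: {bound:.0f} tokens/s per user")
```

Under these assumptions the weights occupy 50GB and the ceiling works out to 140 tokens per second per user. Doubling the active weight bytes halves the ceiling, which is why memory bandwidth, rather than raw FLOPS, tends to set the limit on interactivity.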

"Microsoft is also keen to point out just how much more cost- and power-efficient Maia 200 is than competing accelerators. 'Maia is 30 percent cheaper than any other AI silicon on the market today,' Guthrie said in a promotional video. At 750 watts, the chip uses considerably less power than Nvidia's chips, which can chew through more than 1,200 watts each. This is low enough that Microsoft says Maia can be deployed in either air- or liquid-cooled datacenters."

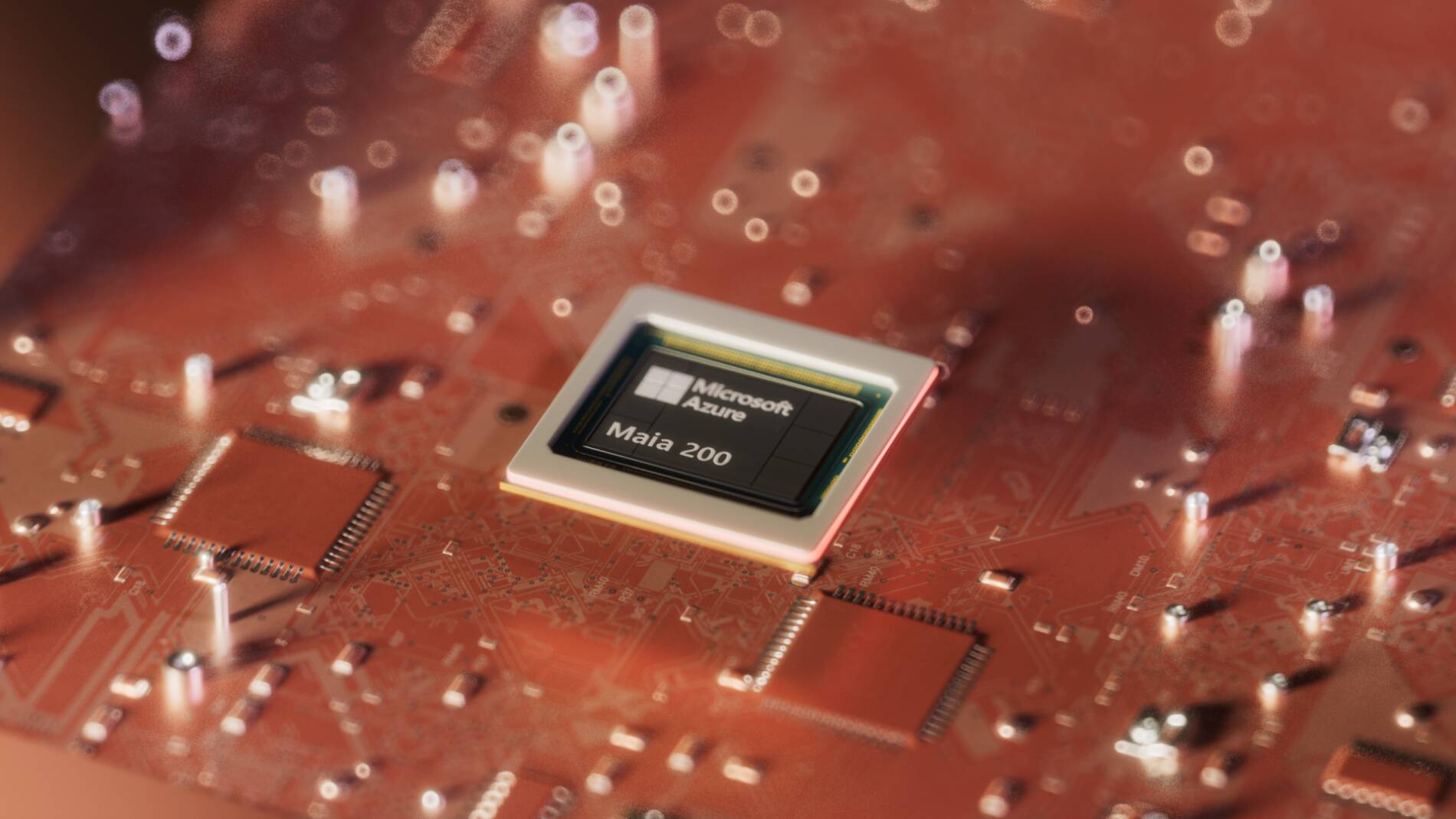

Microsoft has introduced Maia 200, its second-generation in-house AI accelerator, fabricated on TSMC's N3 node with 144 billion transistors and 10 petaFLOPS of FP4 performance. The chip targets inference rather than training and is optimized for serving very large models, including reasoning and chain-of-thought workloads. It carries 216GB of high-speed memory across what appears to be six HBM3e stacks, for a claimed 7TB/s of bandwidth, which eases the memory-streaming bottleneck in inference. Microsoft also stresses cost and power efficiency: it claims Maia is 30 percent cheaper than any other AI silicon on the market, and at 750 watts the chip draws considerably less than Nvidia's chips, which can exceed 1,200 watts each. That is low enough for deployment in either air- or liquid-cooled datacenters.

Read at The Register
