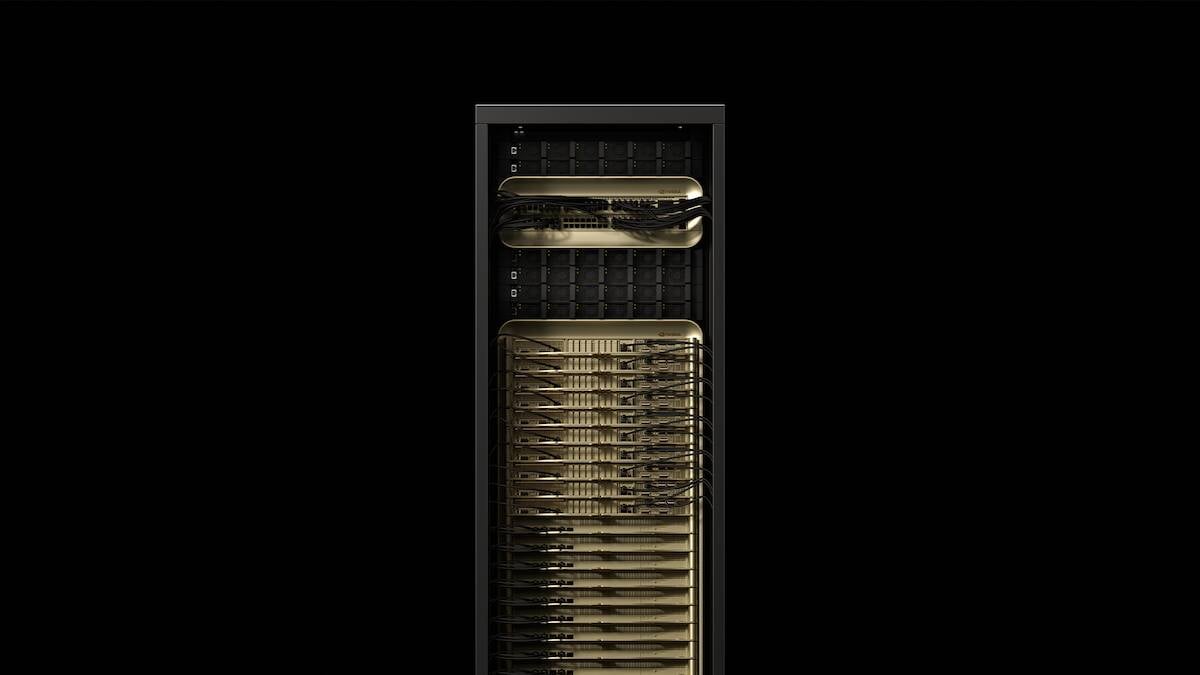

"CES used to be all about consumer electronics, TVs, smartphones, tablets, PCs, and - over the last few years - automobiles. Now, it's just another opportunity for Nvidia to peddle its AI hardware and software - in particular its next-gen Vera Rubin architecture. The AI arms dealer boasts that, compared to Blackwell, the chips will deliver up to 5x higher floating point performance for inference, 3.5x for training, along with 2.8x more memory bandwidth and an NvLink interconnect that's now twice as fast."

"Nvidia has also been teasing the Vera Rubin platform for nearly a year now, to the point where there's not much we didn't already know about the platform. But even though you won't be able to get your hands on Rubin for a few more months, it's never too early for a closer look at what the multi-million dollar machines will buy you."

Nvidia used CES to redirect attention toward its Vera Rubin AI architecture and the hardware and software built around it. Nvidia claims that, compared with Blackwell, Vera Rubin delivers up to 5x higher floating-point performance for inference and 3.5x for training, along with 2.8x more memory bandwidth and an NVLink interconnect that is twice as fast. The chips remain slated for the second half of the year, in line with the earlier Blackwell timeline. AMD adds competitive pressure with its double-wide Helios racks, which aim to match NVL72-class performance while offering 50 percent more HBM4. Nvidia has also refined the NVL72 rack's serviceability and telemetry: switch trays can now be serviced without downtime, and GPU health monitoring and RAS (reliability, availability, serviceability) features have been enhanced.

Read at The Register