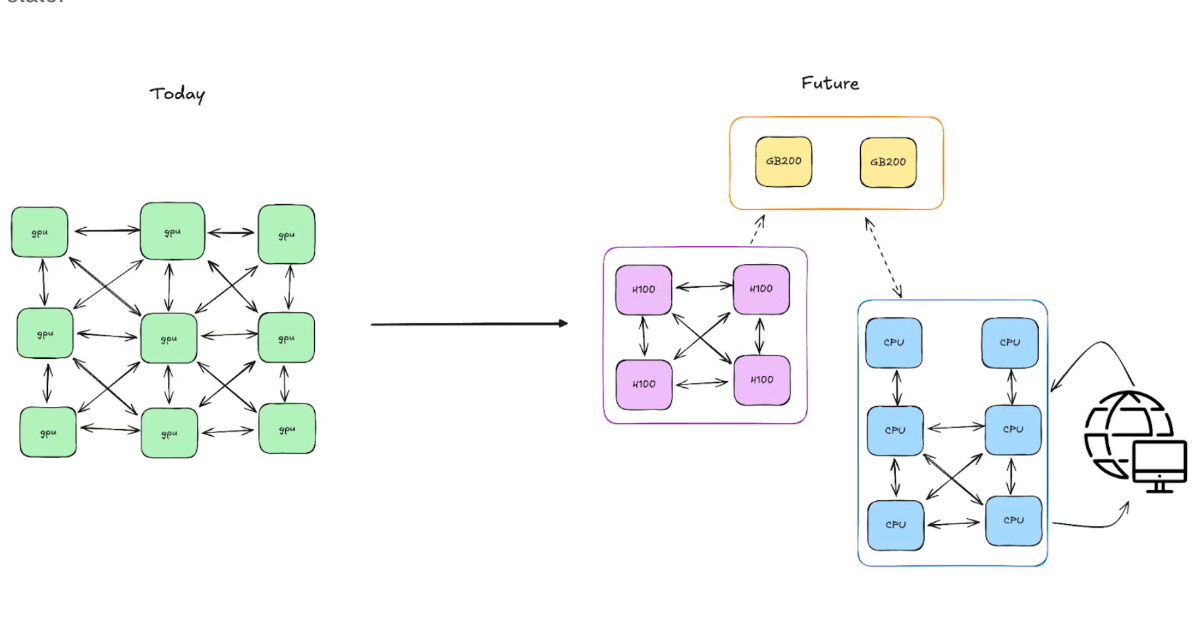

"Meta's PyTorch team has unveiled Monarch, an open-source framework designed to simplify distributed AI workflows across multiple GPUs and machines. The system introduces a single-controller model that allows one script to coordinate computation across an entire cluster, reducing the complexity of large-scale training and reinforcement learning tasks without changing how developers write standard PyTorch code. Monarch replaces the traditional multi-controller approach, in which multiple copies of the same script run independently across machines, with a single-controller model."

"At its core, Monarch introduces process meshes and actor meshes, scalable arrays of distributed resources that can be sliced and manipulated like tensors in NumPy. That means developers can broadcast tasks to multiple GPUs, split them into subgroups, or recover from node failures using intuitive Python code. Under the hood, Monarch separates control from data, allowing commands and large GPU-to-GPU transfers to move through distinct optimized channels for efficiency."
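The mesh idea can be illustrated with a toy model. The sketch below is not Monarch's API. `Worker`, `ToyActorMesh`, `spawn`, and `broadcast` are hypothetical names invented for illustration. The "workers" are also plain in-process Python objects rather than remote GPU processes. The sketch only shows how a 2-D mesh indexed as `[host, gpu]` can be sliced NumPy-style into a subgroup, and how one call can be broadcast to every member of that subgroup.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Worker:
    """Hypothetical stand-in for one actor on one GPU (runs locally, not remotely)."""
    host: int
    gpu: int

    def run(self, task: str) -> str:
        return f"host{self.host}/gpu{self.gpu}: {task}"


class ToyActorMesh:
    """Hypothetical 2-D mesh of workers, indexed as mesh[host_slice, gpu_slice]."""

    def __init__(self, grid: list[list[Worker]]):
        self.grid = grid

    @classmethod
    def spawn(cls, hosts: int, gpus_per_host: int) -> ToyActorMesh:
        # Build one row of workers per host, one worker per GPU.
        return cls([[Worker(h, g) for g in range(gpus_per_host)] for h in range(hosts)])

    @property
    def shape(self) -> tuple[int, int]:
        return (len(self.grid), len(self.grid[0]) if self.grid else 0)

    def __getitem__(self, key: tuple[slice, slice]) -> ToyActorMesh:
        # Only slices are supported here, so the result is always another 2-D mesh.
        host_sel, gpu_sel = key
        if not (isinstance(host_sel, slice) and isinstance(gpu_sel, slice)):
            raise TypeError("index with two slices, e.g. mesh[:, ::2]")
        return ToyActorMesh([row[gpu_sel] for row in self.grid[host_sel]])

    def broadcast(self, task: str) -> list[list[str]]:
        # The caller issues one call and every worker in the (sub)mesh runs it.
        return [[w.run(task) for w in row] for row in self.grid]


if __name__ == "__main__":
    mesh = ToyActorMesh.spawn(hosts=2, gpus_per_host=4)
    print(mesh.shape)        # (2, 4)
    evens = mesh[:, ::2]     # every other GPU on every host
    print(evens.shape)       # (2, 2)
    print(evens.broadcast("step"))
```

Because a slice returns another mesh of the same type, subgroups compose the same way NumPy views do. For example, `mesh[1:, :2]` selects the first two GPUs on the second host.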

Monarch is an open-source framework that simplifies distributed AI workflows across multiple GPUs and machines. It replaces multi-controller setups with a single-controller model in which one script spawns GPU processes, handles failures, and coordinates computation across a cluster. Monarch exposes process meshes and actor meshes as scalable arrays of distributed resources that can be sliced and manipulated like NumPy arrays. The framework separates control from data, so commands and large GPU-to-GPU transfers travel over distinct, optimized channels. Distributed tensors integrate with PyTorch. Remote exceptions can be caught with standard Python try/except blocks, so fault tolerance can be added progressively. The backend is implemented in Rust.
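The single-controller pattern, with remote failures caught by ordinary try/except, can be approximated using the standard library. This is an analogy, not Monarch's implementation or API. Threads stand in for remote GPU processes, and `NodeFailure`, `train_step`, and `controller` are hypothetical names. The key property shown is that `Future.result()` re-raises an exception from the worker inside the one controlling script. That script can then decide per rank what to do.

```python
from __future__ import annotations

from concurrent.futures import ThreadPoolExecutor


class NodeFailure(RuntimeError):
    """Hypothetical error representing a crashed worker node."""


def train_step(rank: int, failed_ranks: set[int]) -> str:
    # Hypothetical per-worker task; ranks listed in failed_ranks simulate a dead node.
    if rank in failed_ranks:
        raise NodeFailure(f"rank {rank} went down")
    return f"rank {rank} ok"


def controller(world_size: int, failed_ranks: set[int]) -> tuple[list[str], list[int]]:
    """One script dispatches work to every worker and handles failures centrally."""
    results: list[str] = []
    failed: list[int] = []
    with ThreadPoolExecutor(max_workers=world_size) as pool:
        futures = {rank: pool.submit(train_step, rank, failed_ranks) for rank in range(world_size)}
        for rank, fut in futures.items():
            try:
                # result() re-raises any exception that was raised inside the worker.
                results.append(fut.result())
            except NodeFailure as err:
                # Standard Python error handling in the controller; could retry or shrink the group.
                failed.append(rank)
                print(f"controller caught: {err}")
    return results, failed


if __name__ == "__main__":
    ok, failed = controller(world_size=4, failed_ranks={2})
    print(ok)      # ['rank 0 ok', 'rank 1 ok', 'rank 3 ok']
    print(failed)  # [2]
```

Starting with no handler and then adding `except` clauses for specific failure types is one way to read "progressively add fault tolerance." The run keeps working while recovery logic grows incrementally.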

Read at InfoQ
