The commoditization of Optical Character Recognition (OCR) has historically been a race to the bottom on price, often at the expense of structural fidelity. The release of Mistral OCR 3, however, signals a distinct shift in the market. By claiming state-of-the-art accuracy on complex tables and handwriting while undercutting AWS Textract and Google Document AI by significant margins, Mistral is positioning its proprietary model not just as a cheaper alternative but as a technically superior parsing engine for Retrieval-Augmented Generation (RAG) pipelines.

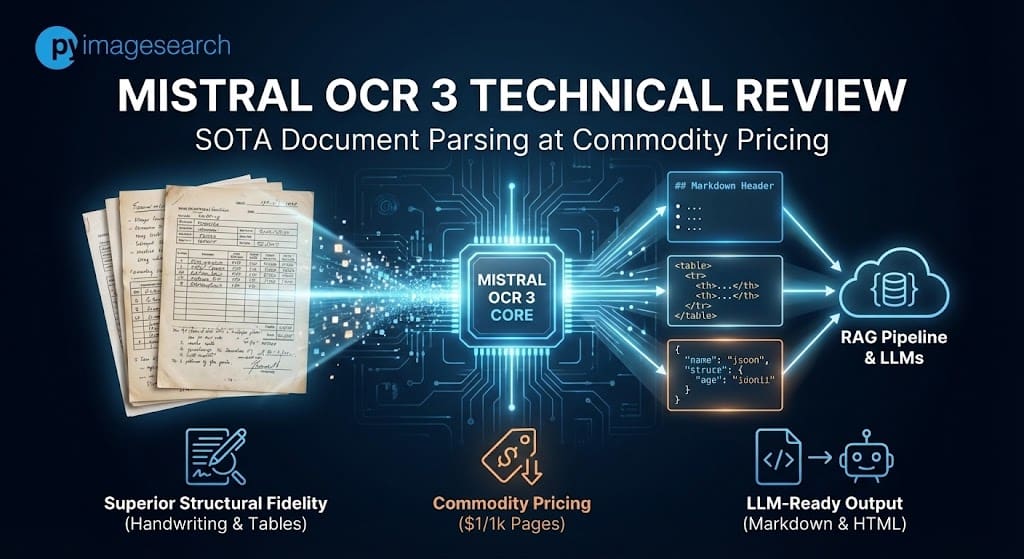

Mistral OCR 3 is a proprietary, efficient model optimized for converting document layouts into LLM-ready Markdown and HTML. Unlike general multimodal LLMs, it focuses on structure preservation, specifically table reconstruction and dense form parsing, and it is available via the `mistral-ocr-2512` endpoint. Traditional OCR engines (such as Tesseract or early AWS Textract iterations) focused primarily on bounding box coordinates and raw text extraction. Mistral OCR 3, by contrast, is architected to solve the "structure loss" problem that plagues modern RAG pipelines, in which layout such as table rows and cells collapses into undifferentiated text.
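The "structure loss" problem is easier to see in code. The sketch below shows the kind of post-processing a coordinate-style output demands: clustering word boxes into reading-order lines. The `WordBox` format, the `boxes_to_lines` helper, the 5-unit tolerance, and the sample coordinates are all hypothetical. They do not mirror Tesseract's or Textract's actual response schemas.

```python
from dataclasses import dataclass


@dataclass
class WordBox:
    """Hypothetical coordinate-style OCR result: one word and its bounding box."""

    text: str
    x0: float
    y0: float
    x1: float
    y1: float

    @property
    def y_center(self) -> float:
        return (self.y0 + self.y1) / 2


def boxes_to_lines(boxes: list[WordBox], y_tol: float = 5.0) -> list[str]:
    """Rebuild reading-order lines by clustering boxes on their vertical centers.

    A box joins the current line if its center is within y_tol of the line's
    first box; each line is then ordered left to right.
    """
    lines: list[list[WordBox]] = []
    for box in sorted(boxes, key=lambda b: (b.y_center, b.x0)):
        if lines and abs(box.y_center - lines[-1][0].y_center) <= y_tol:
            lines[-1].append(box)
        else:
            lines.append([box])
    return [" ".join(b.text for b in sorted(line, key=lambda b: b.x0)) for line in lines]


# Hypothetical boxes for a small table: a header row and one data row.
sample = [
    WordBox("Region", 10, 10, 60, 20),
    WordBox("Q1", 150, 11, 170, 21),
    WordBox("Q2", 220, 10, 240, 20),
    WordBox("North", 10, 40, 50, 50),
    WordBox("America", 55, 41, 110, 51),
    WordBox("120", 150, 40, 175, 50),
    WordBox("135", 220, 40, 245, 50),
]
lines = boxes_to_lines(sample)
print(lines)  # ['Region Q1 Q2', 'North America 120 135']
```

The lines come out in the right order, yet the flattened text cannot say whether "North America" is one cell or two, or which header each number belongs to. That cell-level structure is what a Markdown/HTML output aims to keep.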

The model is described as "much smaller than most competitive solutions" [1], yet it outperforms larger vision-language models on specific high-density layout tasks. Its primary innovation lies in its output modality. Rather than returning JSON coordinates (which require post-processing to reconstruct the layout), Mistral OCR 3 outputs Markdown enriched with HTML-based table reconstruction [1]. This implies the model is trained to recognize document semantics rather than just isolated characters: it identifies that a grid of numbers is a `<table>` with specific `colspan` and `rowspan` attributes.
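On the consuming side, an HTML table with `colspan` and `rowspan` still has to be expanded into a rectangular grid before it can be loaded into a dataframe or serialized row by row for retrieval. The sketch below does this with Python's standard-library `html.parser`. The `TableGridParser` and `expand_spans` names and the sample table are hypothetical. The sketch also assumes a single, well-formed, non-nested table, not any documented Mistral output format.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple

Cell = Tuple[str, int, int]  # (text, rowspan, colspan)


class TableGridParser(HTMLParser):
    """Collect the <th>/<td> cells of one HTML table, row by row, with their spans."""

    def __init__(self) -> None:
        super().__init__()
        self.rows: List[List[Cell]] = []
        self._cell: Optional[List[str]] = None  # text fragments of the open cell
        self._spans = (1, 1)

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self.rows.append([])
        elif tag in ("td", "th"):
            a = dict(attrs)
            # A missing or valueless span attribute defaults to 1.
            self._spans = (int(a.get("rowspan") or 1), int(a.get("colspan") or 1))
            self._cell = []

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self.rows[-1].append(("".join(self._cell).strip(), *self._spans))
            self._cell = None

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)


def expand_spans(rows: List[List[Cell]]) -> List[List[str]]:
    """Lay spanned cells onto a rectangular grid, repeating merged values."""
    grid = {}
    for r, row in enumerate(rows):
        c = 0
        for text, rowspan, colspan in row:
            while (r, c) in grid:  # skip slots already taken by a rowspan above
                c += 1
            for dr in range(rowspan):
                for dc in range(colspan):
                    grid[(r + dr, c + dc)] = text
            c += colspan
    if not grid:
        return []
    n_rows = max(r for r, _ in grid) + 1
    n_cols = max(c for _, c in grid) + 1
    return [[grid.get((r, c), "") for c in range(n_cols)] for r in range(n_rows)]


# Hypothetical HTML table with merged header cells.
html = """
<table>
<tr><th rowspan="2">Region</th><th colspan="2">Revenue</th></tr>
<tr><th>Q1</th><th>Q2</th></tr>
<tr><td>North America</td><td>120</td><td>135</td></tr>
</table>
"""
parser = TableGridParser()
parser.feed(html)
parser.close()
grid = expand_spans(parser.rows)
for row in grid:
    print(row)
```

Repeating a merged cell's value into every slot it covers is one design choice. Leaving the covered slots empty is another. Which works better depends on how the rows are later chunked or embedded.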

Read at PyImageSearch
