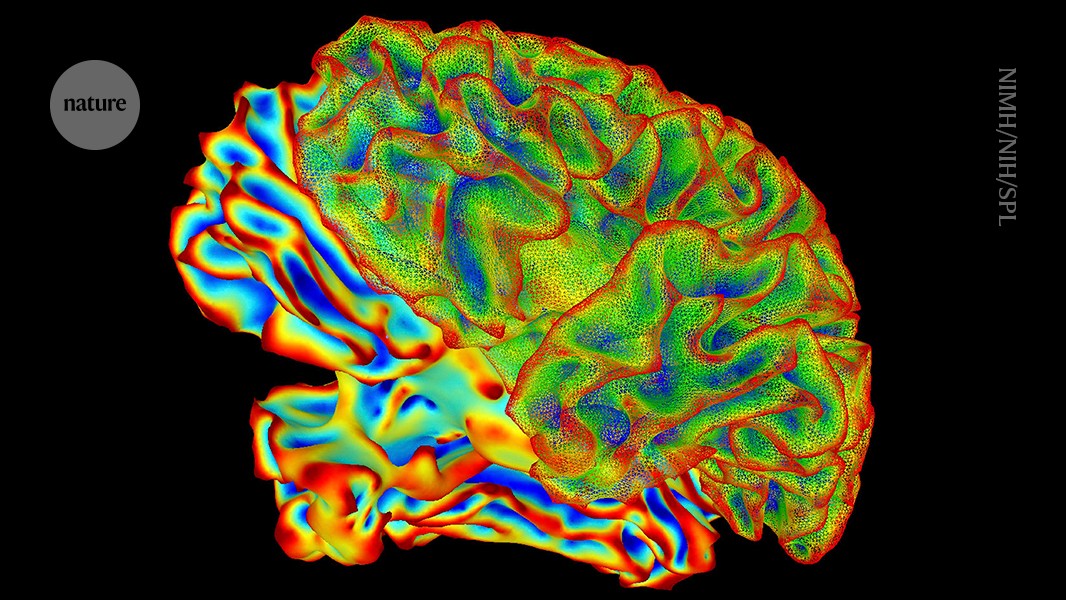

"Reading a person's mind using a recording of their brain activity sounds futuristic, but it's now one step closer to reality. A new technique called 'mind captioning' generates descriptive sentences of what a person is seeing or picturing in their mind using a read-out of their brain activity, with impressive accuracy. The technique, described in a paper published today in Science Advances, also offers clues for how the brain represents the world before thoughts are put into words."

"Previous attempts have identified only key words that describe what a person saw rather than the complete context, which might include the subject of a video and actions that occur in it, says Tomoyasu Horikawa, a computational neuroscientist at NTT Communication Science Laboratories in Kanagawa, Japan. Other attempts have used artificial intelligence (AI) models that can create sentence structure themselves, making it difficult to know whether the description was actually represented in the brain, he adds."

Mind captioning combines brain-activity recordings with deep language models to generate descriptive sentences of what a person is viewing or imagining. The approach converts text captions from more than 2,000 videos into numerical "meaning signatures", then trains a model on participants' brain scans to match activity patterns to those signatures. The resulting descriptions capture full context, such as the subject of a video and the actions in it, whereas earlier methods either extracted only keywords or relied on AI models that supply sentence structure themselves. The technique offers clues about how the brain represents perceptual content before thoughts are put into words, and it has potential applications for restoring communication in people with language impairments caused by stroke or other conditions.
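The matching step described above can be illustrated with a small toy example. This is only a sketch under stated assumptions, not the study's method: the data are synthetic, ridge regression stands in for whatever decoder the authors trained, and the example merely retrieves the closest existing caption signature rather than generating a new sentence. The names `fit_ridge` and `nearest_caption` are hypothetical.

```python
import numpy as np

def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression from brain features X to caption signatures Y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)

def nearest_caption(pred, signatures):
    """For each predicted signature, return the index of the most cosine-similar candidate."""
    p = pred / np.linalg.norm(pred, axis=1, keepdims=True)
    s = signatures / np.linalg.norm(signatures, axis=1, keepdims=True)
    return np.argmax(p @ s.T, axis=1)

# Synthetic stand-ins (assumptions): random "meaning signatures" and a
# noisy linear brain response to them. Real data would be caption
# embeddings and brain-scan recordings.
rng = np.random.default_rng(0)
n_videos, n_voxels, dim = 200, 50, 16
signatures = rng.normal(size=(n_videos, dim))
mixing = rng.normal(size=(dim, n_voxels))
brain = signatures @ mixing + 0.1 * rng.normal(size=(n_videos, n_voxels))

# Train on the first 150 videos, then decode the 50 held-out ones.
train, test = np.arange(150), np.arange(150, 200)
W = fit_ridge(brain[train], signatures[train])
pred = brain[test] @ W

# A held-out scan counts as correct if its predicted signature is closest
# to the signature of the video that was actually shown.
picked = nearest_caption(pred, signatures[test])
accuracy = float(np.mean(picked == np.arange(len(test))))
print(f"held-out identification accuracy: {accuracy:.2f}")
```

Decoding into a signature space rather than directly into words keeps the learned mapping separate from any language model, which relates to the concern in the excerpt above about AI models supplying sentence structure on their own.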

Read at Nature
