"The analysis reveals a long-tailed distribution of concept frequencies in pretraining datasets, with over two-thirds of concepts occurring at negligible frequencies relative to dataset size."

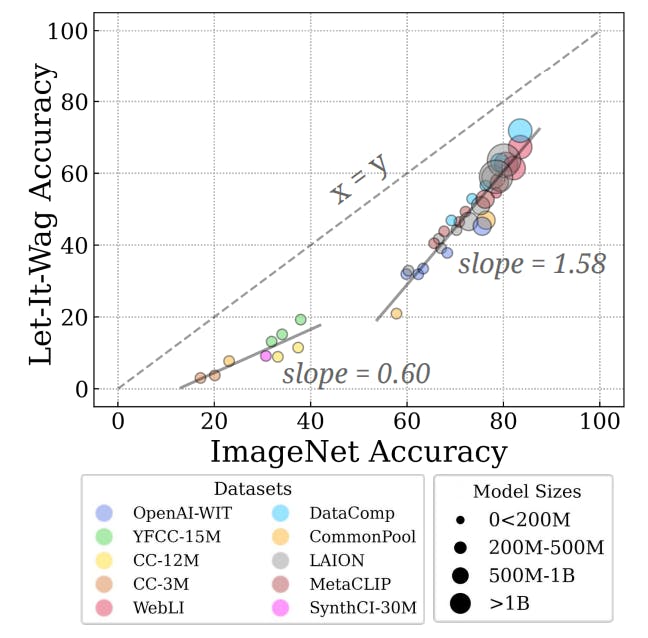

"Disparities in performance across various tasks are directly tied to the long-tailed distribution of concept frequencies observed in large-scale pretraining datasets."

Pretraining datasets show a long-tailed distribution of concepts: over two-thirds of concepts occur at frequencies that are negligible relative to dataset size. This skew is linked directly to the performance disparities seen across tasks, which suggests that how often a concept appears during pretraining influences how well a model handles it. The analysis reinforces prior findings on the long-tailed nature of large-scale language datasets. Understanding this distribution matters when adapting models to new applications, since rare concepts need adequate representation during pretraining to perform well downstream.
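
To make the idea of a long tail concrete, here is a minimal Python sketch of how the share of rare concepts could be measured. It is not the method used in the analysis: the function name `tail_fraction`, the whole-word matching rule, the `threshold` cutoff for "negligible", and the toy captions are all assumptions chosen for illustration.

```python
from collections import Counter


def tail_fraction(samples, concepts, threshold):
    """Return (fraction of rare concepts, per-concept sample counts).

    samples   -- list of text samples (toy stand-in for a pretraining dataset)
    concepts  -- list of lowercase concept words to search for
    threshold -- hypothetical cutoff: a concept is "rare" if it appears in
                 fewer than this fraction of all samples
    """
    counts = Counter()
    for text in samples:
        # Naive whole-word matching; a real pipeline would need lemmatization,
        # multi-word concepts, and (for image data) visual tagging.
        words = set(text.lower().split())
        for concept in concepts:
            if concept in words:
                counts[concept] += 1

    n = len(samples)
    rare = [c for c in concepts if counts[c] / n < threshold]
    return len(rare) / len(concepts), counts


# Toy data, invented purely for illustration.
samples = (
    ["a dog on grass"] * 6
    + ["a cat on a sofa"] * 3
    + ["an axolotl in water"] * 1
)
concepts = ["dog", "cat", "axolotl", "okapi"]

fraction, counts = tail_fraction(samples, concepts, threshold=0.2)
print(f"{fraction:.0%} of concepts fall below the cutoff")
print(dict(counts))
```

On this toy input, two of the four concepts ("axolotl" and "okapi") fall below the cutoff. On a real pretraining corpus the same kind of count is what would expose the long tail, though the choice of cutoff and matching rule strongly affects the number reported.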

#pretraining #concept-frequency #performance-disparities #long-tailed-distribution #model-evaluation

Read at Hackernoon
