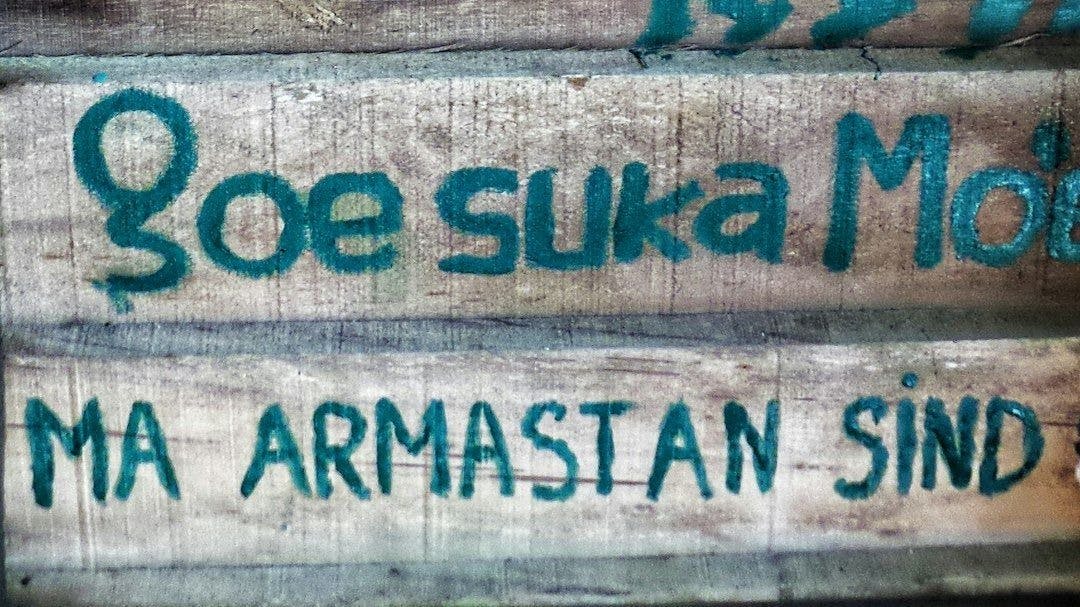

"The disconnect between tokenizer creation and model training allows certain inputs, termed 'glitch tokens,' to induce unwanted behavior in language models."

"This research highlights a lack of consistent methods to identify untrained and under-trained tokens prevalent in the tokenizer vocabulary of Large Language Models."

"Our comprehensive analysis presents effective methods based on tokenizer analysis and prompting techniques to automatically detect problematic tokens across various models."

"Findings indicate that improving the detection and handling of glitch tokens can enhance the safety and efficiency of Large Language Models."

The article explores the disconnect between tokenizer creation and model training in Large Language Models (LLMs), a gap that allows 'glitch tokens' such as _SolidGoldMagikarp to exist. It emphasizes the need for consistent methods to detect under-trained tokens and presents a comprehensive analysis that combines tokenizer analysis, model weight indicators, and prompting techniques. Applying these methods shows that glitch tokens are prevalent across many models, and the findings suggest ways to improve model efficiency and safety through better identification and management of these problematic tokens.
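To illustrate what a model-weight indicator can look like, here is a minimal sketch that flags tokens whose embedding rows have an unusually small L2 norm. It assumes that rows rarely or never updated during training (and possibly shrunk by weight decay) stand out from the rest of the vocabulary. This is a hypothetical heuristic, not necessarily the indicator the article uses. The function names, the z-score cut-off `z_threshold`, and the plain-list embedding format are all illustrative assumptions.

```python
import math
import statistics
from typing import Dict, List, Sequence


def row_norms(embeddings: Sequence[Sequence[float]]) -> List[float]:
    """L2 norm of each embedding row (one row per token id)."""
    return [math.sqrt(sum(x * x for x in row)) for row in embeddings]


def flag_low_norm_tokens(
    embeddings: Sequence[Sequence[float]],
    vocab: Dict[int, str],
    z_threshold: float = 2.0,
) -> List[str]:
    """Return tokens whose embedding norm is unusually small.

    Hypothetical heuristic: a token whose norm lies more than
    `z_threshold` population standard deviations below the vocabulary
    mean is treated as a candidate under-trained token.
    """
    norms = row_norms(embeddings)
    mean = statistics.fmean(norms)
    std = statistics.pstdev(norms)
    if std == 0.0:
        return []  # every row has the same norm, so no outlier exists
    return [
        vocab[token_id]
        for token_id, norm in enumerate(norms)
        if (norm - mean) / std < -z_threshold
    ]


# Toy data (not from the article): ten ordinary rows and one near-zero row.
toy_embeddings = [[1.0, 0.0] for _ in range(10)] + [[0.01, 0.0]]
toy_vocab = {i: f"tok{i}" for i in range(10)}
toy_vocab[10] = "rare_token"
print(flag_low_norm_tokens(toy_embeddings, toy_vocab))
```

Tokens flagged this way are only suspects. Since the source describes combining weight indicators with prompting techniques, a flagged token would still need to be checked, for instance by prompting the model to repeat it; that verification step is not shown here.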

Read at Hackernoon
