"In statistics, linear regression is a statistical model that estimates the linear relationship between a scalar response and one or more explanatory variables."

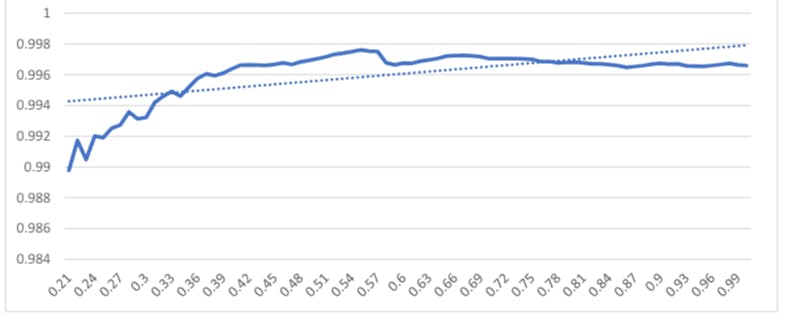

"The performance of each of the selected subsets was measured using a 10-fold cross-validation method, dividing the dataset randomly into 10 parts."

"Linear regression identifies the equation that produces the smallest difference between all observed values and their fitted values, finding the smallest sum of squared residuals."

"R-squared evaluates the scatter of the data points around the fitted regression line, providing insight into the model's explanatory power."

This article discusses the application of linear regression in statistical modeling, highlighting its functionality in estimating linear relationships between a dependent variable and explanatory variables. The authors detail a methodology involving a 10-fold cross-validation technique to measure the performance of selected variable subsets. They also explain the concept of errors-in-variables models for cases where explanatory variables contain measurement errors. Key metrics like the R-squared value are introduced to evaluate the model's effectiveness in capturing the data's variance, thus enhancing the reliability of predictive analytics.

Read at Hackernoon

Unable to calculate read time

Collection

[

|

...

]