"Feature selection aims to reduce the dimensionality of data, improving machine learning performance by selecting the most relevant variables from a large dataset."

"The feature selection process consists of four key steps: Subset Generation, Evaluation of Subset, Stopping Criteria, and Result Validation, essential for effective modeling."

"The choice of subset generation methods, whether forward, backward, or random, significantly impacts the trajectory and effectiveness of the feature selection process."

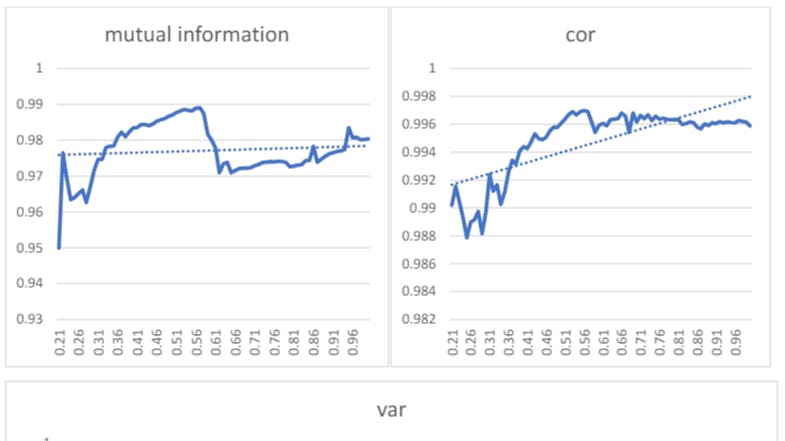
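
The three subset generation strategies named above (forward, backward, random) can be sketched as candidate generators. This is a minimal illustration, not the article's own implementation: the function names and the example feature names (`age`, `income`, `height`) are hypothetical, and the random variant simply draws subsets of random size.

```python
import random


def forward_candidates(selected, all_features):
    """Forward generation: grow the current subset by one unused feature."""
    return [selected | {f} for f in sorted(all_features - selected)]


def backward_candidates(selected):
    """Backward generation: shrink the current subset by one feature."""
    return [selected - {f} for f in sorted(selected)]


def random_candidates(all_features, n_candidates, rng):
    """Random generation: draw non-empty subsets of random size."""
    pool = sorted(all_features)
    candidates = []
    for _ in range(n_candidates):
        size = rng.randint(1, len(pool))  # inclusive on both ends
        candidates.append(frozenset(rng.sample(pool, size)))
    return candidates


# Hypothetical feature names, used only for illustration.
features = frozenset({"age", "income", "height"})

forward = forward_candidates(frozenset({"age"}), features)
backward = backward_candidates(features)
randoms = random_candidates(features, 3, random.Random(0))

print(forward)
print(backward)
print(randoms)
```

Forward generation starts small and adds features, backward generation starts from the full set and removes them, and random generation samples the search space without a fixed direction, which is why the choice affects which subsets ever get evaluated.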

"A well-structured feature selection enhances the capabilities of machine learning algorithms by narrowing down dataset variables, ultimately leading to better performance evaluations."

Feature selection improves machine learning performance by reducing the dimensionality of a dataset. This article outlines its four key steps: Subset Generation, Evaluation of Subset, Stopping Criteria, and Result Validation. It emphasizes that the choice of subset generation method (forward, backward, or random) determines which candidate subsets are explored, and therefore which features end up selected. According to the article, applying these steps systematically supports better forecasting and performance evaluation across a range of applications.
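
The four steps can be sketched as a single greedy loop. The example below is a hedged sketch using forward generation only. The scoring function is a toy stand-in (a per-feature relevance table minus a size penalty) rather than a real model, and `make_scorer`, `forward_selection`, `min_gain`, and the relevance values are all assumptions introduced for illustration.

```python
def make_scorer(relevance, penalty=0.1):
    """Build a toy evaluation function: summed relevance minus a size penalty.

    In practice this would be replaced by a model's validation score.
    """
    def evaluate(subset):
        return sum(relevance[f] for f in sorted(subset)) - penalty * len(subset)
    return evaluate


def forward_selection(all_features, evaluate, min_gain=1e-9, max_features=None):
    """Greedy forward selection following the four-step process."""
    selected = frozenset()
    best_score = evaluate(selected)
    limit = len(all_features)
    if max_features is not None:
        limit = min(limit, max_features)

    while len(selected) < limit:
        # Step 1: Subset Generation (add one unused feature at a time).
        candidates = [selected | {f} for f in sorted(all_features - selected)]
        # Step 2: Evaluation of Subset.
        score, candidate = max(
            ((evaluate(c), c) for c in candidates), key=lambda pair: pair[0]
        )
        # Step 3: Stopping Criteria (stop when no candidate improves the score).
        if score - best_score <= min_gain:
            break
        selected, best_score = candidate, score
    return selected, best_score


# Hypothetical relevance tables standing in for training and held-out data.
train_relevance = {"age": 0.5, "income": 0.3, "noise": 0.0}
holdout_relevance = {"age": 0.45, "income": 0.25, "noise": 0.0}

selected, train_score = forward_selection(
    frozenset(train_relevance), make_scorer(train_relevance)
)

# Step 4: Result Validation (re-score the chosen subset on separate data).
holdout_score = make_scorer(holdout_relevance)(selected)

print(sorted(selected), train_score, holdout_score)
```

With these toy numbers the loop keeps `age` and `income` and stops before adding `noise`, because the size penalty outweighs a feature that contributes nothing. Re-scoring on a separate table mirrors the validation step: the subset is chosen on one source of evidence and checked on another.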

#feature-selection #machine-learning #data-dimensionality #subset-evaluation #performance-evaluation

Read at Hackernoon
