"The study emphasizes the importance of selecting feature selection techniques that exhibit low sensitivity to data size while ensuring high predictive performance, particularly in small datasets."

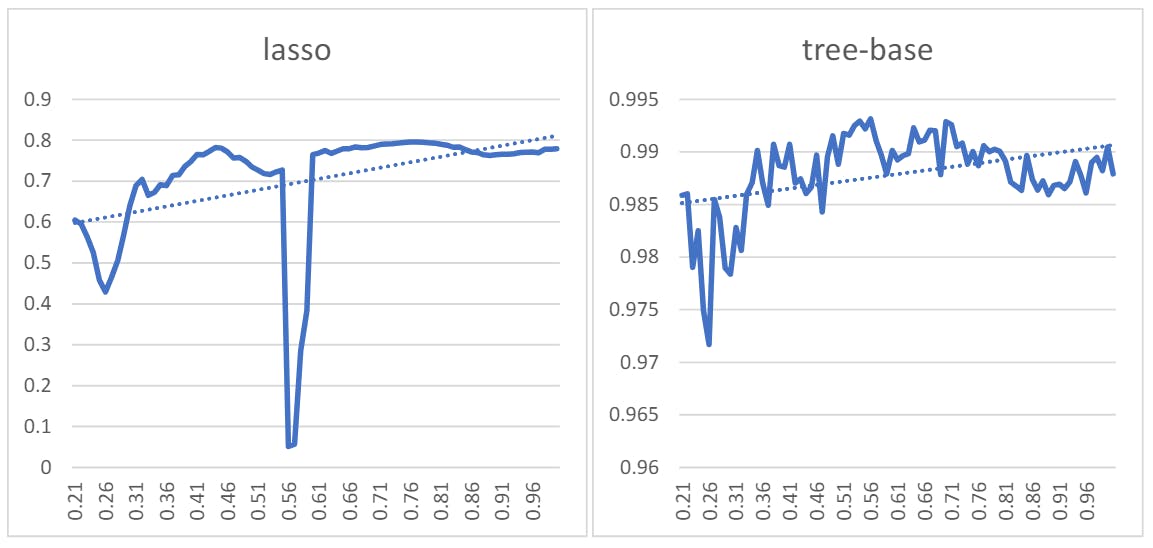

"Results indicate that standard feature selection methods exhibit fluctuating performance with varying data sizes. Variance and simulated methods are highlighted for their stability across data volumes."

"Hausdorff and edit distance methods perform competitively with standard feature selection methods, suggesting these similarity methods may offer a more reliable alternative in certain contexts."

"The research identifies three main evaluation criteria for selecting feature selection methods: r-squared value, sensitivity to sample size changes, and stability during sample size fluctuations."

The study investigates feature selection methods that stay insensitive to small dataset sizes while maintaining predictive accuracy. It evaluates techniques on three criteria: r-squared value, sensitivity to sample size changes, and stability as the sample size fluctuates. The variance and simulated methods showed greater stability across data volumes, while Hausdorff and edit distance performed competitively with standard methods. Standard feature selection methods perform inconsistently as data volume varies, whereas the similarity methods are less volatile, which makes the resulting predictive models more reliable. The study's conclusion acknowledges limitations regarding dataset variations.
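To make the three criteria concrete, here is a minimal Python sketch that scores one candidate feature at several training sample sizes. The definitions are assumptions made for illustration, not the study's own: sensitivity is taken as the slope of r-squared against sample size, and stability as the standard deviation of r-squared across sizes. The helper names (`fit_line`, `r2_by_sample_size`, `sensitivity`, `stability`) and the synthetic data are hypothetical.

```python
import random
import statistics


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_y = statistics.fmean(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def fit_line(x, y):
    """Ordinary least squares for a single predictor; returns (slope, intercept)."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx


def r2_by_sample_size(x, y, sizes, x_test, y_test):
    """Fit on the first n training points for each n, score on a fixed test set."""
    scores = []
    for n in sizes:
        slope, intercept = fit_line(x[:n], y[:n])
        preds = [slope * a + intercept for a in x_test]
        scores.append(r_squared(y_test, preds))
    return scores


def sensitivity(sizes, scores):
    """Assumed definition: slope of r-squared as a function of sample size."""
    slope, _ = fit_line(sizes, scores)
    return slope


def stability(scores):
    """Assumed definition: population std. dev. of r-squared (lower is steadier)."""
    return statistics.pstdev(scores)


# Synthetic data (hypothetical): y depends linearly on x plus Gaussian noise.
rng = random.Random(0)
x_all = [rng.uniform(0, 10) for _ in range(300)]
y_all = [2.0 * a + 1.0 + rng.gauss(0, 2.0) for a in x_all]
x_train, y_train = x_all[:200], y_all[:200]
x_test, y_test = x_all[200:], y_all[200:]

sizes = [10, 25, 50, 100, 200]
scores = r2_by_sample_size(x_train, y_train, sizes, x_test, y_test)
print("r-squared per size:", [round(s, 3) for s in scores])
print("sensitivity:", sensitivity(sizes, scores))
print("stability:", stability(scores))
```

Under these assumed definitions, a method that suits small datasets would combine a high r-squared with a sensitivity near zero and a low stability value. Comparing feature selection methods would mean repeating the loop once per method, each with its own selected features.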

Read at Hackernoon
