"In training a language model, just like a child learning to speak, feedback shapes the understanding of appropriate and effective responses, guiding better communication."

"Reinforcement Learning from Human Feedback (RLHF) helps model learning by incorporating responses from peers and teachers, allowing for corrections and improving language use in debates."

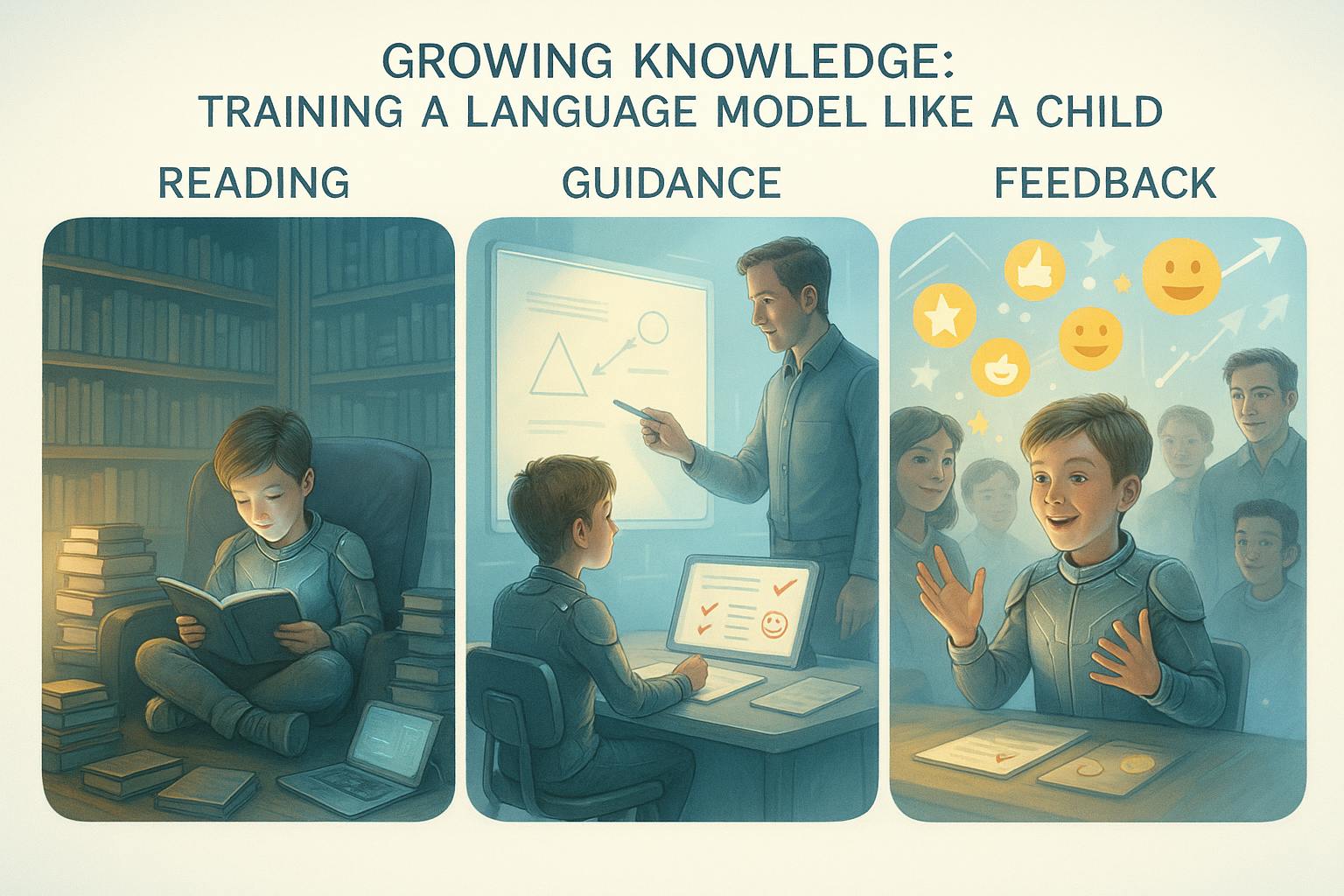

Training a large language model (LLM) mirrors the way a child acquires language. It has three primary stages: 1) In Self-Supervised Pre-Training, the model learns to predict the next word in a sentence, akin to a child reading books to grasp context and grammar. 2) In Supervised Fine-Tuning, the model is shown examples of proper responses, which steers it away from harmful outputs. 3) In Reinforcement Learning from Human Feedback, people's judgments of the model's responses serve as feedback, refining its sense of appropriate communication much as social interaction does for a child.
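To make the first stage concrete, here is a minimal sketch of next-word prediction, assuming a toy corpus and a simple word-pair (bigram) counter in place of a neural network. The function names `train_bigram` and `predict_next` and the example sentences are hypothetical, chosen only for illustration. A real LLM learns this objective with a neural network over vastly more text, but the training signal is the same: the text itself supplies the "correct" next word, so no human labels are needed.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count, for every word, which words follow it in the corpus."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        # Each adjacent pair (current, following) is one training example:
        # the text itself provides the label, hence "self-supervised".
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus, standing in for the books a child might read.
corpus = [
    "the cat sat on the mat",
    "the cat chased the mouse",
    "the dog sat on the rug",
]

model = train_bigram(corpus)
print(predict_next(model, "sat"))  # on
print(predict_next(model, "the"))  # cat
```

The later stages reuse the same prediction machinery but change where the signal comes from: curated example responses in Supervised Fine-Tuning, and human judgments of the model's outputs in Reinforcement Learning from Human Feedback.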

#language-learning #machine-learning #natural-language-processing #education #reinforcement-learning

Read at Hackernoon
