"What helped me was slowing down, stripping things back, and paying attention to what actually worked under load, not what looked clever on LinkedIn."

"At first, everything feels stable: the agent responds, runs code, and behaves as expected. But the moment you swap the model, restart the system, or add a new interface, things break."

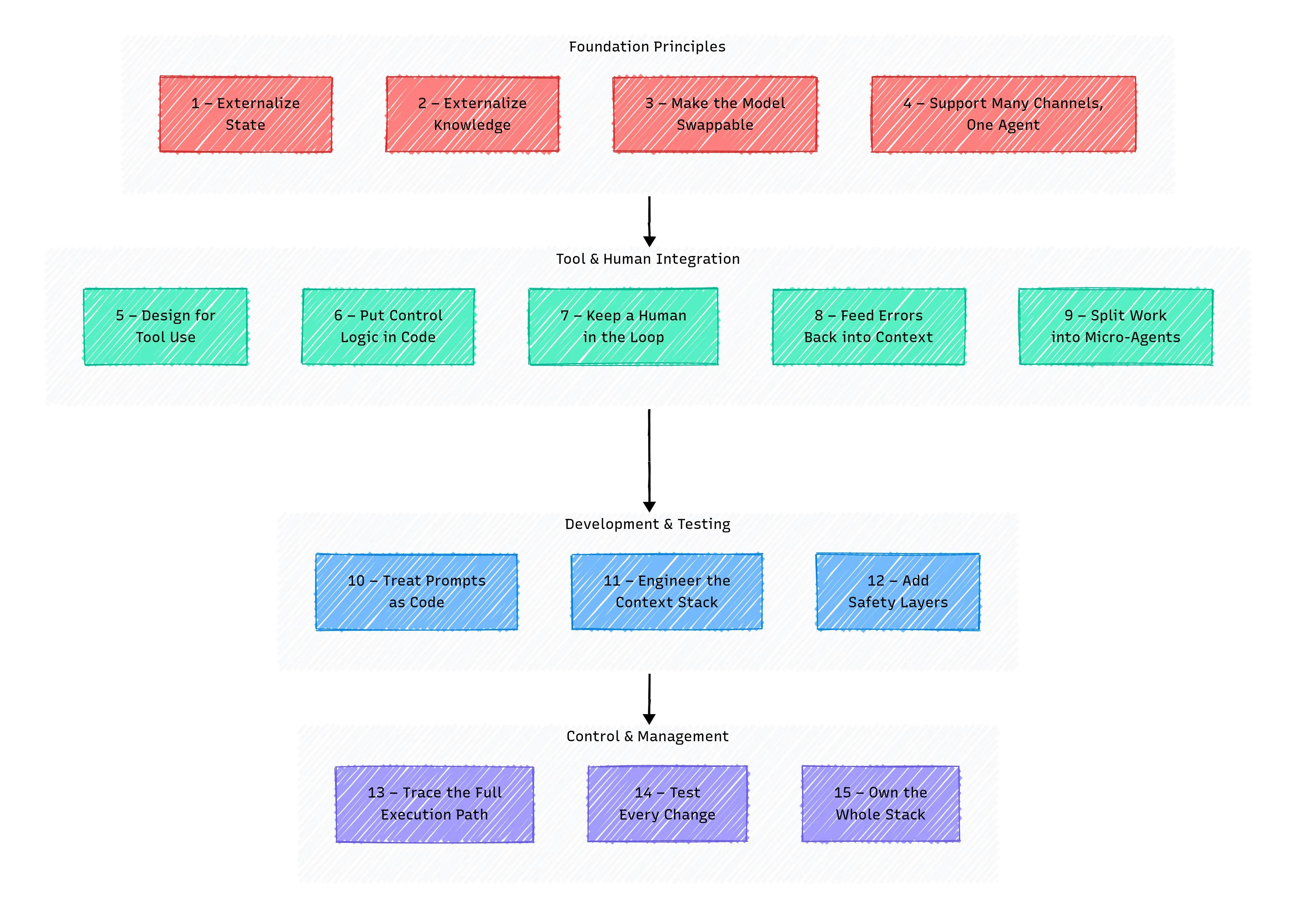

Building effective AI systems takes more than simple API calls. Early prototypes may seem successful but often fail under real-world conditions. Common failures stem from poor memory management, hardcoded values, and a lack of session persistence. To build scalable, controllable AI systems, engineers should adhere to core principles that reinforce stability. Slow, deliberate development that prioritizes practical functionality over theoretical elegance tends to produce more robust agents that stay reliable under varying operational loads.
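As a rough illustration of two of the issues named above (hardcoded values and missing session persistence), here is a minimal Python sketch. It is not taken from the article: the environment variable names `AGENT_MODEL` and `AGENT_MAX_HISTORY`, the `AgentConfig` and `SessionStore` classes, and the JSON-file storage are all assumptions chosen for the example.

```python
import json
import os
from dataclasses import dataclass
from pathlib import Path


@dataclass(frozen=True)
class AgentConfig:
    """Settings read from the environment instead of being hardcoded."""

    model: str
    max_history: int

    @classmethod
    def from_env(cls) -> "AgentConfig":
        # AGENT_MODEL and AGENT_MAX_HISTORY are hypothetical variable names.
        return cls(
            model=os.environ.get("AGENT_MODEL", "default-model"),
            max_history=int(os.environ.get("AGENT_MAX_HISTORY", "50")),
        )


class SessionStore:
    """Keeps one session's message history in a JSON file on disk."""

    def __init__(self, directory: Path, session_id: str, max_history: int) -> None:
        if max_history < 1:
            raise ValueError("max_history must be at least 1")
        self._path = directory / f"{session_id}.json"
        self._max_history = max_history
        # Reload any history left behind by a previous process.
        self.messages = self._load()

    def _load(self) -> list:
        if self._path.exists():
            return json.loads(self._path.read_text(encoding="utf-8"))
        return []

    def append(self, role: str, content: str) -> None:
        self.messages.append({"role": role, "content": content})
        # Keep only the most recent messages so memory stays bounded.
        self.messages = self.messages[-self._max_history:]
        # Write to a temporary file, then swap it into place, so a crash
        # mid-write does not leave a truncated history file.
        tmp_path = self._path.with_suffix(".tmp")
        tmp_path.write_text(json.dumps(self.messages), encoding="utf-8")
        os.replace(tmp_path, self._path)
```

With something like this, swapping the model becomes a change to an environment variable, and constructing a new `SessionStore` with the same directory and session ID after a restart picks up the saved history. A production system would more likely use a database or cache than flat files.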

Read at Hackernoon

