"Existing methods for structured commonsense generation typically flatten the output graphs into strings, and often struggle to generate well-formed outputs."

"We address the problem of structured generation by translating the task into Python code, allowing for better representation and organization of commonsense reasoning."

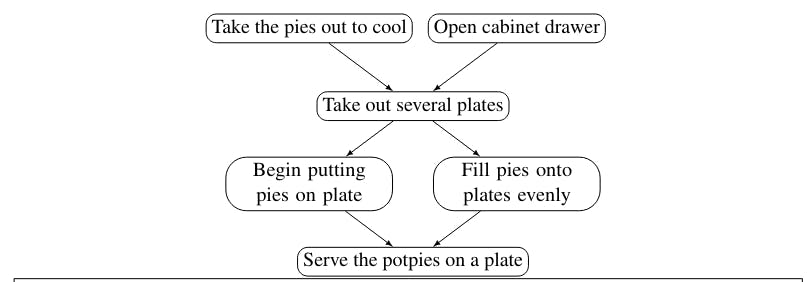

"Programs inherently encode rich structures and can efficiently represent task procedures, leveraging the control flows and nested functions inherent in programming."

"As code-generation models gain popularity, there is growing interest in adapting them for reasoning tasks, demonstrating their effectiveness beyond traditional procedural applications."

The article presents an approach to structured commonsense reasoning that uses large code-generation models (Code-LLMs). Whereas existing methods flatten output graphs into strings, this method translates the reasoning task into Python code, which makes well-formed, well-organized outputs easier to generate. By leveraging programming constructs such as control flow and nested functions, the approach addresses the limitations of existing structured generation techniques. It also evaluates how well Code-LLMs handle broader reasoning tasks, highlighting their adaptability and their potential to advance commonsense reasoning beyond simple procedural tasks.
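To make the contrast concrete, here is a minimal sketch of the same small graph written once as a flattened string and once as Python source. It is an illustration only, not the article's actual prompt or output format: the `Step` class, the `flatten` and `to_python` helpers, and the cake-baking example are all hypothetical names and data chosen for this sketch.

```python
import ast
from dataclasses import dataclass, field


@dataclass
class Step:
    """One node of a script graph; `children` are the steps that follow it."""
    text: str
    children: list = field(default_factory=list)


def flatten(edges):
    """Baseline format: the whole graph serialized as a single string."""
    return "; ".join(f"{src} -> {dst}" for src, dst in edges)


def to_python(goal, edges):
    """Render the same graph as Python source text (hypothetical target format)."""
    # Keep nodes in order of first appearance so variable names are stable.
    nodes = list(dict.fromkeys(n for edge in edges for n in edge))
    var = {n: f"step{i}" for i, n in enumerate(nodes)}
    lines = [f"# goal: {goal}"]
    lines += [f"{var[n]} = Step({n!r})" for n in nodes]
    lines += [f"{var[s]}.children.append({var[d]})" for s, d in edges]
    return "\n".join(lines)


# Hypothetical example graph: steps for baking a cake.
EDGES = [
    ("find recipe", "gather ingredients"),
    ("gather ingredients", "mix ingredients"),
    ("find recipe", "preheat oven"),
    ("mix ingredients", "put batter in oven"),
    ("preheat oven", "put batter in oven"),
]

flat = flatten(EDGES)
source = to_python("bake a cake", EDGES)

# Unlike the flat string, the code form can be checked by a standard parser:
ast.parse(source)  # raises SyntaxError if the text is not well-formed Python

# Executing the source rebuilds the graph as linked Step objects.
namespace = {"Step": Step}
exec(source, namespace)
```

One reason the code form may help is that well-formedness becomes mechanically checkable: a malformed generation fails `ast.parse`, whereas a malformed flat string needs a custom parser to detect.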

#commonsense-reasoning #code-generation #machine-learning #natural-language-processing #artificial-intelligence

Read at Hackernoon
