"Low-resource languages like Lua offer unique challenges for code generation models, making them suitable test cases for evaluating performance and mitigating biases in instruction fine-tuning."

"Lua’s unconventional programming paradigms and less extensive training data could contribute to more pronounced performance degradation in quantized models compared to high-resource languages."

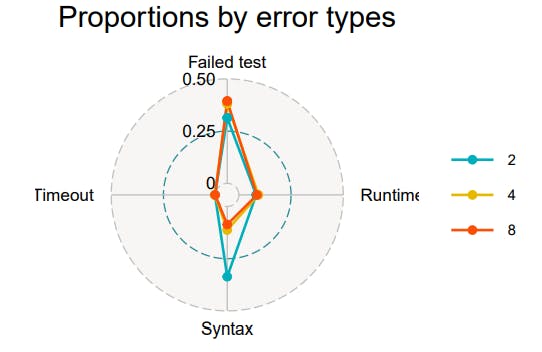

Lua is a scripting language commonly used in game engines and embedded devices. It is considered low-resource because comparatively little Lua code is available for training, which limits language model performance, particularly in code generation. This article discusses why Lua is a good test case for evaluating quantized models, which tend to perform poorly in low-resource scenarios. The key Lua benchmarks are derived from the Python-based HumanEval and MBPP evaluations and contain 161 and 397 programming tasks, respectively. In addition, MCEVAL provides 50 human-written, Lua-specific tasks that reflect the language's distinctive programming characteristics. These factors inform RQ2, the research question concerning model efficacy.

Read at Hackernoon

