"But the idea that new technology can decode a person's inner voice is "unsettling," says Nita Farahany, a professor of law and philosophy at Duke University and author of the book: The Battle for Your Brain. "The more we push this research forward, the more transparent our brains become," Farahany says, adding that measures to protect people's mental privacy are lagging behind technology that decodes signals in the brain."

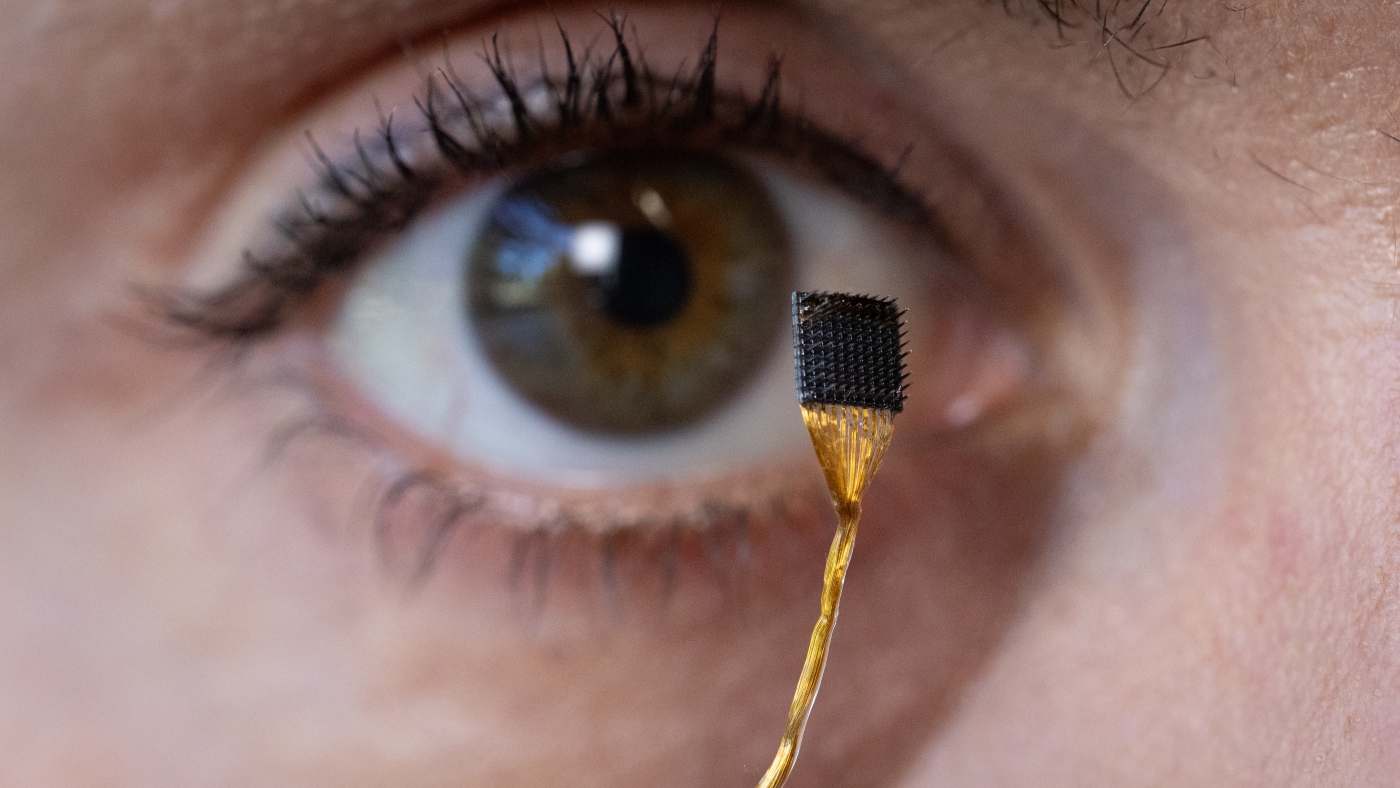

"From brain signal to speech BCI's are able to decode speech using tiny electrode arrays that monitor activity in the brain's motor cortex, which controls the muscles involved in speaking. Until now, those devices have relied on signals produced when a paralyzed person is actively trying to speak a word or sentence. "We're recording the signals as they're attempting to speak and translating those neural signals into the words that they're trying to say," says Erin Kunz."

Implanted brain-computer interfaces can decode inner speech as well as signals from attempted speech. Tiny electrode arrays monitor activity in the motor cortex, which controls the muscles involved in speaking, and the resulting neural patterns are translated into words. Earlier devices decoded only the signals generated when users actively attempted to speak, which takes effort and time but also lets users withhold speech. Decoding imagined speech relies on subtler neural signals and could let paralyzed users produce synthesized speech more quickly and with less fatigue. The ability to access a person's inner monologue raises mental privacy concerns, because protective measures lag behind advances in brain-signal decoding.

Read at www.npr.org
