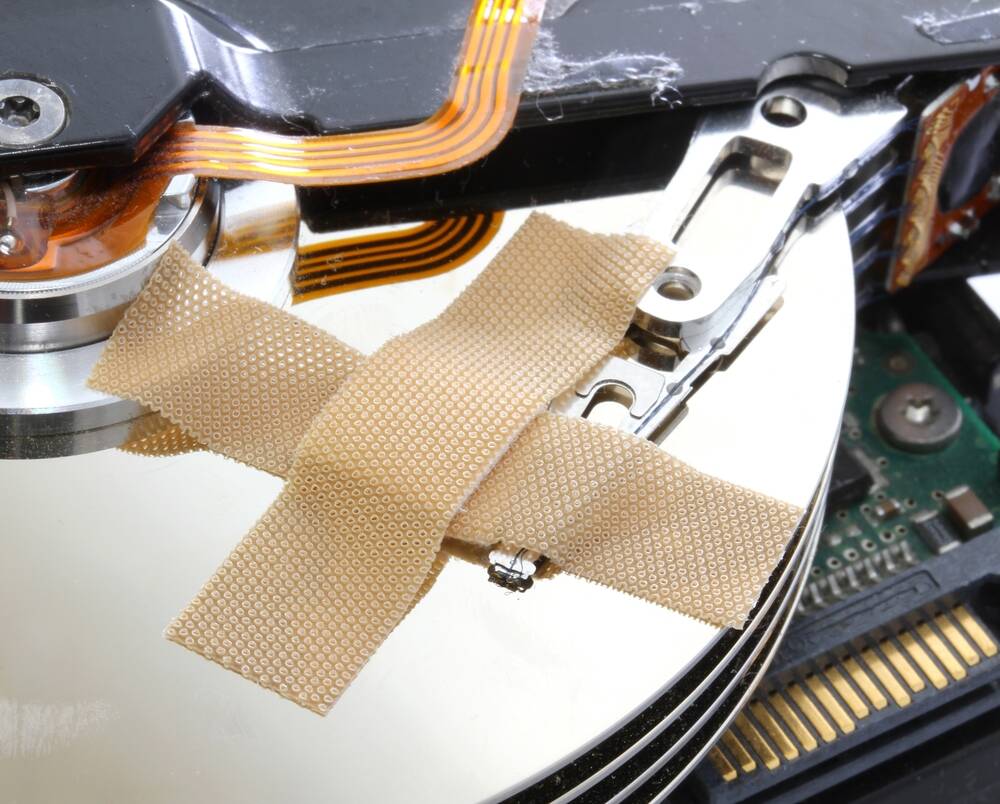

"A RAID failure has taken the Matrix.org homeserver offline, leaving users of the decentralized messaging service unable to send or receive messages while engineers attempt a 55 TB database restore. To be clear, those with their own homeservers, such as government organizations, are unaffected, but anyone using Matrix.org as their homeserver will have been hearing the sound of silence from the platform while the team works to bring the service back online."

"The team was understandably a little cautious when restoring the database and eventually reported: "We haven't been able to restore the DB primary filesystem to a state we're confident in running as a primary (especially given our experiences with slow-burning postgres db corruption)." The solution is a full 55 TB database snapshot restore followed by a replay of 17 hours' worth of traffic. At the time of writing, the team had managed to restore the snapshot and subsequent incremental backups and was about to embark on the traffic replay."

A RAID failure caused the secondary Matrix.org database to lose its filesystem at 1117 UTC on September 2, and the primary failed at 1726 UTC, taking the homeserver offline. The homeserver relies on a large PostgreSQL database that had already suffered slow-developing index corruption in July, which caused room-join failures and message delivery problems. Engineers are performing a full 55 TB database snapshot restore and plan to replay about 17 hours of traffic; at the time of writing, the snapshot and incremental backups had been restored and the traffic replay was about to begin. Only users who rely on the Matrix.org homeserver are affected; self-hosted homeservers remain operational. The failure followed a routine storage upgrade exercise that went badly wrong.

Read at The Register
