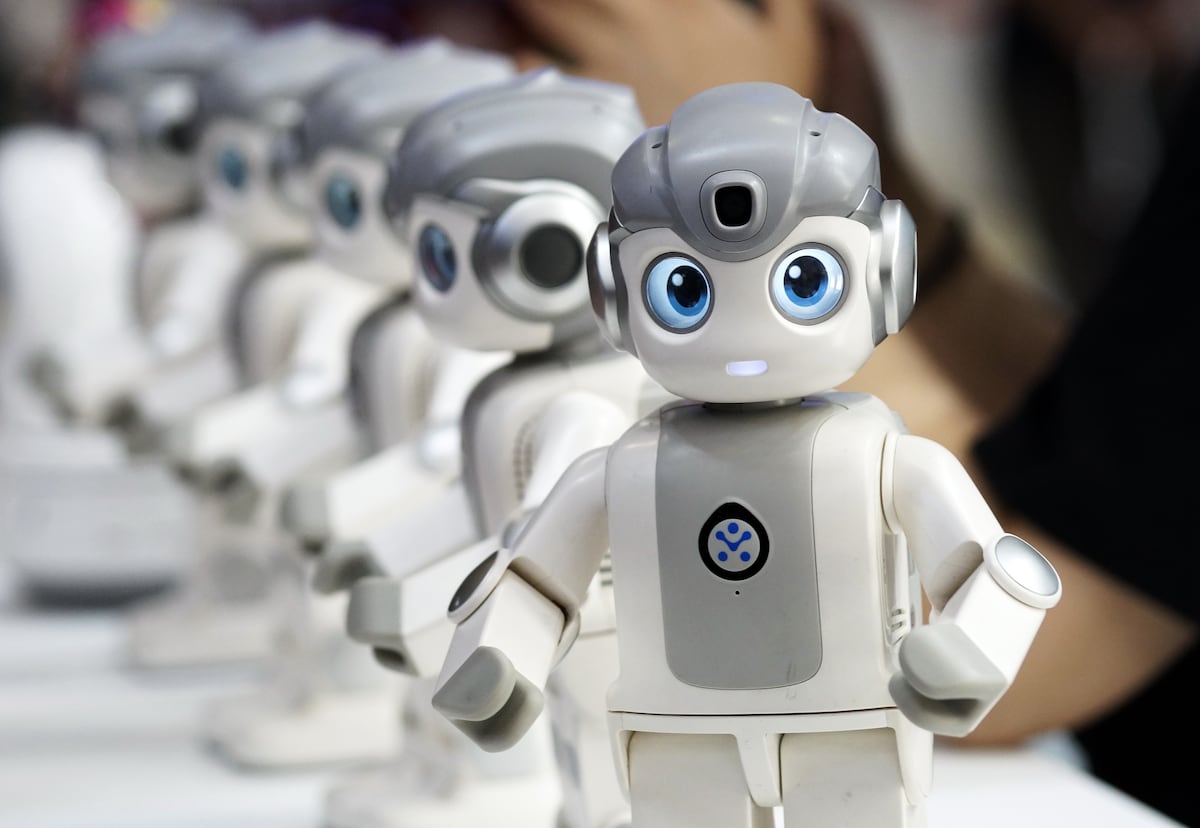

"The study found that when large language models are prompted to respond as humans would, they rate the emotions depicted in images similarly to human volunteers."

"Large language models correlate their emotional assessments with human ratings, signaling their ability to learn sophisticated emotional concepts through natural language."

"The research highlights that modern AI systems can learn representations of emotional concepts without explicit training, indicating a significant leap in capabilities."

"Evaluating the emotional responses of these models may align them with societal norms, potentially reducing risks associated with biased or inappropriate outputs."

Large language models, especially multimodal systems, can produce emotional evaluations of images that closely align with human judgments. In a study of ChatGPT-4o, Gemini Pro, and Claude Sonnet, the models' ratings of the emotions depicted in images correlated with those of human volunteers when the models were prompted to respond as a human would. The systems arrive at these ratings through probabilistic modeling of language and images. This suggests they learn complex emotional concepts indirectly from training on diverse datasets, without explicit training on those concepts. The finding underscores the need to study these models further so that their outputs align with societal values, reducing the risk of biased or inappropriate responses.

Read at english.elpais.com
