"CodeLlama 7B Instruct with 2-bit quantization achieved 31.6% pass@1 rate, while HumanEval benchmark showed 34.9% correct solutions from five models at 4-bit quantization."

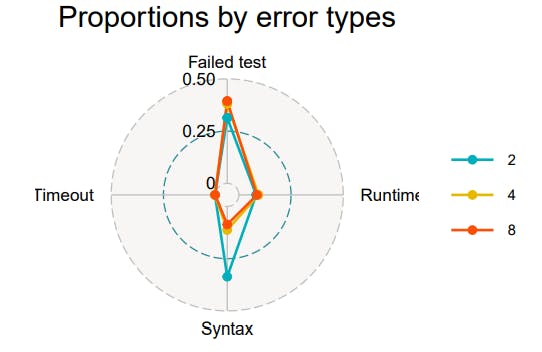

"The negative impact of quantization is evident, especially within the 2-bit models, where CodeGemma failed to solve any tasks, highlighting the model's limitations."

"Performance increases significantly from 2-bit to 4-bit quantization, but the improvement between 4-bit and 8-bit is marginal, suggesting less ability to leverage higher bits effectively."

"CodeQwen consistently ranks among the top models, showing a notable increase in performance with 8-bit quantization, outperforming others such as CodeLlama."

The evaluation involved five language models (LLMs) executing 10,944 tasks in Lua, measuring their pass@1 rates at varying quantization levels (2-bit, 4-bit, and 8-bit). The results indicated that most models scored below a 50% pass@1 rate, with CodeLlama and CodeGemma notably underperforming, especially at 2-bit quantization. Transitioning from 2-bit to 4-bit models led to significant performance improvements, although shifts to 8-bit yielded marginal gains. Noteworthy performance was observed in CodeQwen, which excelled with better quantization, reflecting variability in LLM effectiveness based on quantization strategies.

Read at Hackernoon

Unable to calculate read time

Collection

[

|

...

]