"In the era of AI-assisted development, the way we interact with code is fundamentally changing. More teams are building copilots, documentation assistants, or internal LLM agents that help developers with relevant queries."

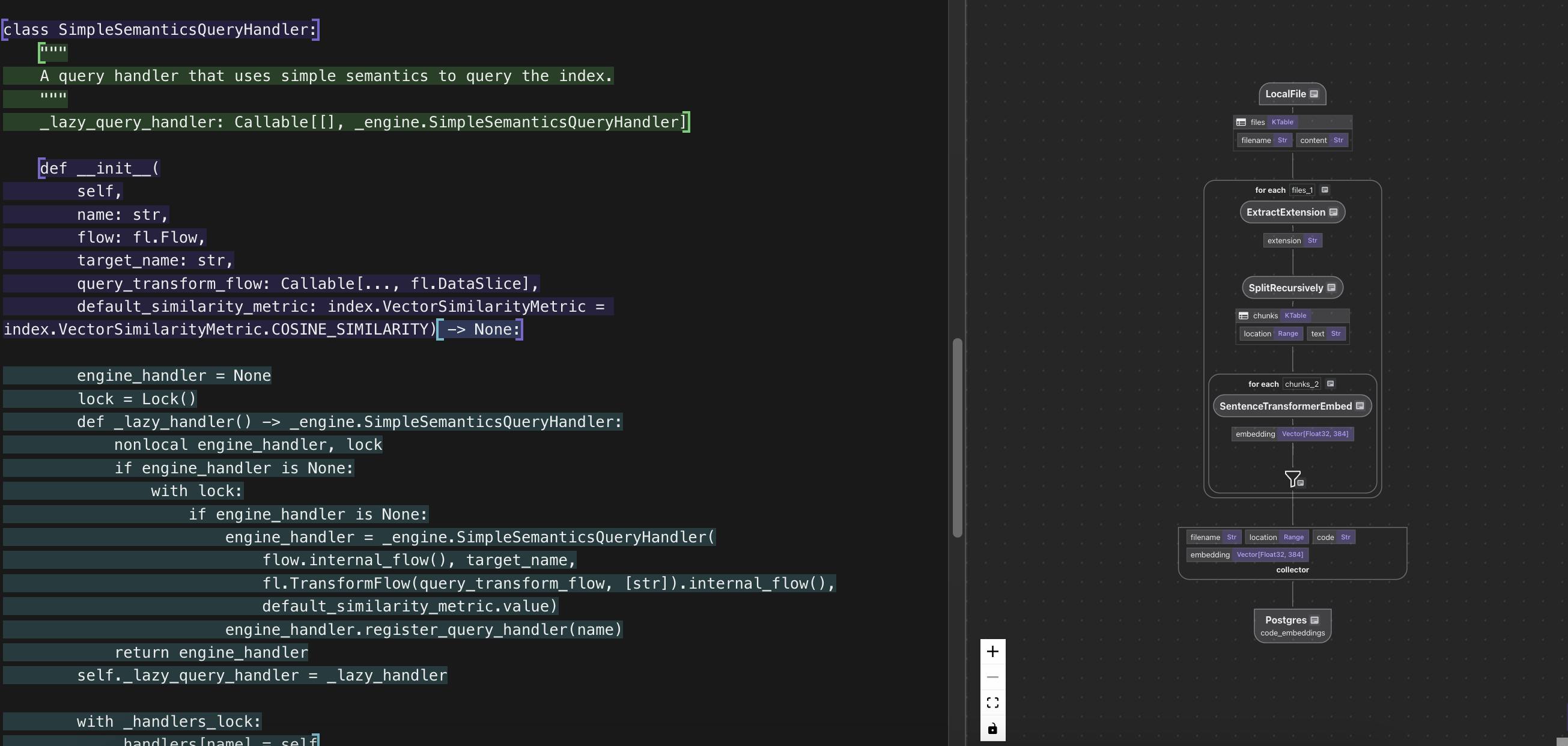

"LLMs have a limited context window, and dumping your entire repository into a prompt is inefficient. Indexing allows for chunking and structuring code for effective retrieval."
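As a rough illustration of chunking, the sketch below splits a Python file into one chunk per top-level function or class using the standard-library `ast` module. The function name `chunk_python_source` and the chunk dictionary layout are assumptions made for this example, not something the article prescribes; other languages would need their own parser.

```python
import ast


def chunk_python_source(source: str, path: str = "<memory>") -> list[dict]:
    """Split Python source into one chunk per top-level function or class.

    Hypothetical helper: the chunk fields (path, name, line range, text)
    are an assumed layout chosen for illustration.
    """
    tree = ast.parse(source)
    chunks = []
    for node in tree.body:
        # Only top-level definitions become chunks; imports and loose
        # statements are skipped in this simplified sketch.
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)):
            chunks.append(
                {
                    "path": path,
                    "name": node.name,
                    "start_line": node.lineno,
                    "end_line": node.end_lineno,
                    "text": ast.get_source_segment(source, node),
                }
            )
    return chunks
```

Chunking on definition boundaries keeps each retrieved piece self-contained, which tends to fit a limited context window better than fixed-size slices that cut functions in half.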

"AI agents require semantic search based on meaning, which necessitates embedding the codebase in a vector index for accurate context retrieval."
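The shape of a vector index can be sketched in a few lines. The example below is a minimal in-memory version, and everything in it is an assumption for illustration: `toy_embed` is a token-count stand-in for a real embedding model (so it matches shared words, not meaning), and `VectorIndex` is a hypothetical class, not a real library API. In practice the `embed` function would be replaced by a call to an actual embedding model.

```python
import math
import re
from collections import Counter


def toy_embed(text: str) -> Counter:
    # ASSUMPTION: stand-in for a real embedding model. It only counts
    # lowercase word tokens, so it captures word overlap, not semantics.
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class VectorIndex:
    """Hypothetical in-memory index: stores (chunk_id, vector) pairs."""

    def __init__(self, embed=toy_embed):
        self.embed = embed
        self.items = []

    def add(self, chunk_id: str, text: str) -> None:
        self.items.append((chunk_id, self.embed(text)))

    def search(self, query: str, k: int = 3) -> list[tuple[str, float]]:
        # Brute-force scan: score every chunk, return the k best.
        q = self.embed(query)
        scored = [(chunk_id, cosine(q, vec)) for chunk_id, vec in self.items]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]
```

A brute-force scan like this is fine for a small repository; larger codebases would typically use an approximate nearest-neighbor index instead.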

"A good index updates when the code changes, preventing LLMs from providing outdated answers. Real-time or incremental indexing is crucial for accuracy."
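One simple way to approximate incremental indexing is to compare content hashes between runs and re-index only what changed. The sketch below assumes file contents are already loaded into a dictionary; `plan_reindex` and its return shape are hypothetical names chosen for this example, and a real pipeline might instead react to file-system events or version-control diffs.

```python
import hashlib


def file_digest(content: str) -> str:
    """Stable fingerprint of a file's content."""
    return hashlib.sha256(content.encode("utf-8")).hexdigest()


def plan_reindex(previous: dict[str, str], files: dict[str, str]):
    """Decide which files need re-embedding.

    previous: path -> digest recorded on the last indexing run
    files:    path -> current file content
    Returns (changed_paths, removed_paths, new_digests).
    """
    current = {path: file_digest(text) for path, text in files.items()}
    # New or modified files: digest is missing or differs from last run.
    changed = sorted(p for p, d in current.items() if previous.get(p) != d)
    # Deleted files: their chunks should be dropped from the index.
    removed = sorted(p for p in previous if p not in current)
    return changed, removed, current
```

Persisting `new_digests` between runs means an unchanged file costs one hash computation rather than a fresh round of chunking and embedding.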

AI-assisted development is changing how developers interact with code: teams are building documentation assistants and LLM agents that answer contextual queries. Without an index tailored to the codebase, large language models struggle to answer questions about it. Indexing chunks and structures code so that the relevant pieces can be retrieved at query time. Retrieval-augmented generation (RAG) depends on semantic search over embeddings rather than traditional keyword matching. Because software keeps evolving, the index must be updated regularly, ideally incrementally or in real time, so that LLMs answer from current code rather than outdated snapshots.

Read at Hackernoon
