#uncertainty


#leadership #decision-making #anxiety #resilience #tariffs #mental-health #creativity #personal-growth #neuroscience

from Medium

1 week ago Embrace the mess: how to tell honest UX stories that help you grow

You just finished a design project. And it was a mess. Timelines constantly shifted. Stakeholders disagreed, going back and forth. You made calls without enough data to support them. Maybe the final design wasn't what you wanted. Now comes the hard part: thinking about how you're going to talk about it in your portfolio or case study. Most designers have one basic instinct in this scenario: clean it up. Tell the story as if there was no conflict, no missteps, and a smooth experience.

UX design

from Silicon Canals

2 weeks ago Rewatching the same show over and over is your brain's way of coping with this

Last week, I caught myself starting The Office for what must be the fifteenth time. My partner walked in, saw Jim pranking Dwight with the stapler in Jell-O, and just shook his head. "Again?" he asked. And honestly? I couldn't explain why I kept going back to the same show when there's literally endless content available at my fingertips. But here's the thing: I'm not alone in this.

Television

World politics

from Medium

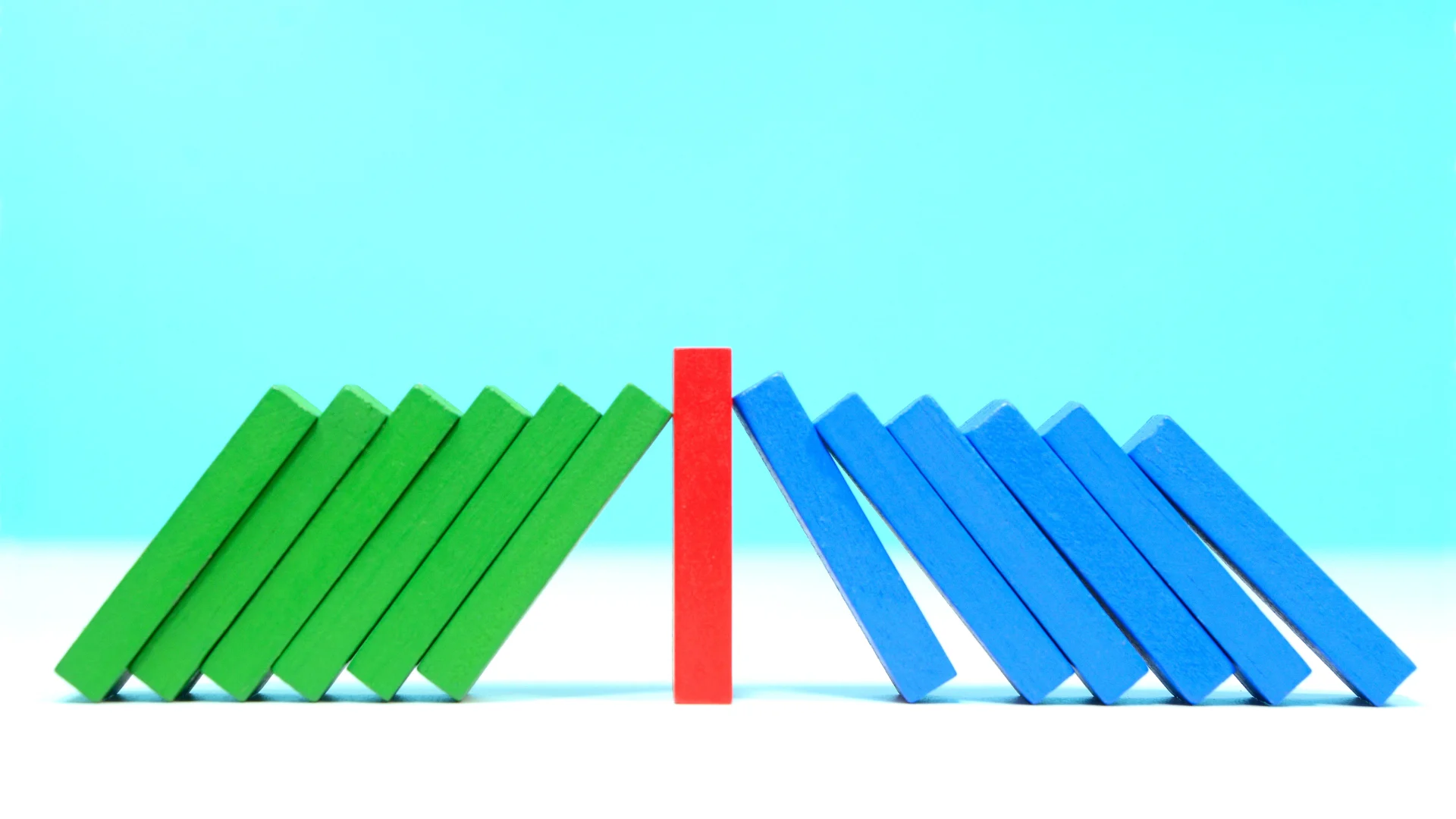

3 weeks ago Beyond the waterfall state: why missions need a different decision-making architecture

Government needs architectures that combine stewardship of stable systems with agile approaches enabling divergent creativity, collective judgement, and experimentation to manage uncertainty.

from Psychology Today

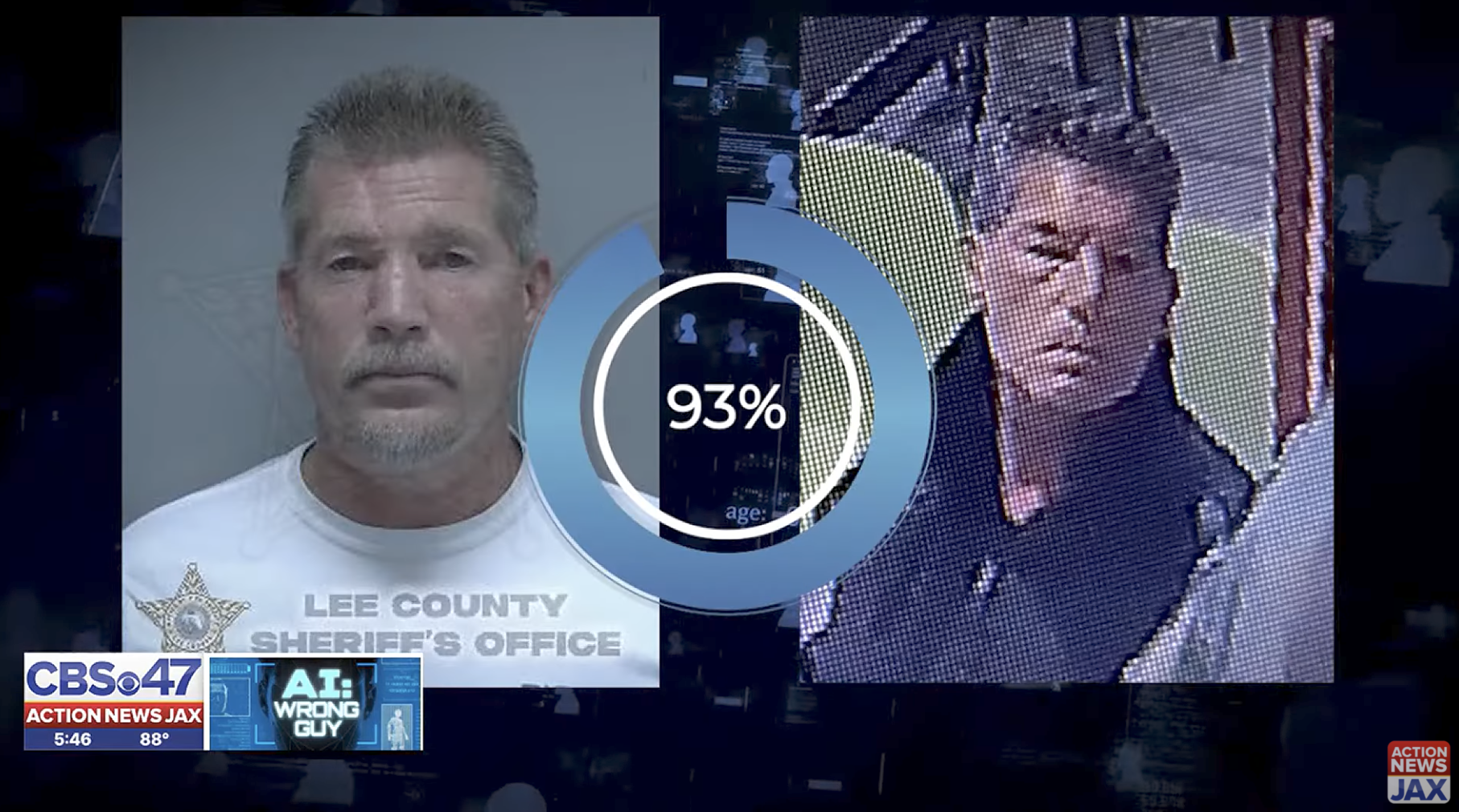

3 weeks ago The Tragic Flaw in AI

One of the strangest things about large language models is not what they get wrong, but what they assume to be correct. LLMs behave as if every question already has an answer. It's as if reality itself is always a kind of crossword puzzle. The clues may be hard, the grid may be vast and complex, but the solution is presumed to exist. Somewhere, just waiting to be filled in.

Artificial intelligence

from designboom | architecture & design magazine

1 month ago reflective red sphere suspends from century-old tree in gregory orekhov's land art installation

The red sphere, originally associated with the ritual of celebration and the expectation of magic, is stripped of its function and returned to the landscape as a heavy, vulnerable form without foundation. Suspended by a hemp rope from a bare century-old tree, the object exists between ground and space; neither in fall nor at rest, but in a prolonged state of uncertainty.

Arts

from Harvard Business Review

1 month ago What Companies that Excel at Strategic Foresight Do Differently

At the start of a new year, it's human nature to want a crystal ball: What lies ahead, and how will it affect us? This feeling is particularly acute in times of uncertainty, when the ability to engage in meaningful foresight can feel elusive at best. And whether the world is objectively more volatile, it certainly feels that way to CEOs: Based on our analysis of earnings calls, 2025 saw a significant spike in discussions about uncertainty, with little sign of abating in 2026.

Business

from Fast Company

1 month ago The psychology of the 'Chicken Little' coworker

Everybody knows this coworker: the one who spirals about cost-cutting layoffs when snacks vanish from the break room. The one who thinks they're getting fired because their boss hasn't been using emojis with them lately. The one who's the office Chicken Little: anxious, somewhat frantic, often misguided . . . and who can't stop talking to others about whatever it is they're anxious about.

Mental health

from RoughMaps

1 month ago Are You Fit for the Digital Nomad Life?

You've seen it all on your social media feed: people working from the beach, at a roadside hut, or on a train to another city, every day a different adventure. Digital nomads are rewriting what the typical nine-to-five looks like, and more than ever, you're convinced that it's the right path for you, too. After all, if remote work has become so normalized, why not take advantage of it? But the digital nomad lifestyle, glamorous as it may seem, might not be for everyone.

Digital life

from Psychology Today

1 month ago 3 Ways Improv Helps Build Leadership Skills

In making an idea together, you are trying to build a shared reality. We are both building a non-existent thing. Because improvisers are creating something out of nothing, they are forced to listen to each other, to pay attention, in a deeper way than in their ordinary lives. People can make assumptions and skim over details in their day-to-day lives, but while improvising, they have to catch every word and even catch details that go beyond their partner's words.

Mindfulness

Design

from Fortune

2 months ago Designer Kevin Bethune: Bringing 'disparate disciplines around the table' is how leaders can 'problem solve the future'

Nonlinear, multidisciplinary design and diverse collaboration are essential to navigate pervasive 2025 uncertainty across macroeconomic, geopolitical, policy, and technological domains.

from www.nature.com

2 months ago Retraction Note: The economic commitment of climate change

The authors have retracted this paper for the following reasons: post-publication, the results were found to be sensitive to the removal of one country, Uzbekistan, where inaccuracies were noted in the underlying economic data for the period 1995–1999. Furthermore, spatial auto-correlation was argued to be relevant for the uncertainty ranges. The authors corrected the data from Uzbekistan for 1995–1999 and controlled for data source transitions and higher-order trends as present in the Uzbekistan data.

from english.elpais.com

2 months ago You're not psychic, you just have anticipatory anxiety: Why it seems like you're able to predict horrible events

The figure of the witch has always been tied to the gift of seeing the future: the three witches from Macbeth, the powerful volvas (Viking witches), Galadriel the elf in Lord of the Rings, all endowed with a special intuition. In a society that is turning to esotericism on social media to deal with great uncertainty, as evidenced by accounts like @charcastrology and @horoscoponegro, it is possible to start thinking you may have these powers as well.

Mental health

from www.theguardian.com

3 months ago Want to know everything? Perhaps it's best if you don't

If we want to build a better life, we have to be able to not know. Does that sound confusing? Perhaps you don't know what I'm talking about? Good! That's great practice. If you cannot tolerate not knowing, you run the risk of arranging your life so you can know everything (or at least try to), and you may end up sapping your existence of any spontaneity and joy.

Mindfulness

from Psychology Today

3 months ago The Wisdom of Temporal Perspectives in Decision-Making

The answer requires what I call wisdom of temporal perspectives in our decision-making. The wisdom of temporal perspectives involves the temporal appraisal of the current situation, where we take into consideration past factors that give rise to the situation and future consequences that may transpire when solving problems and making decisions. It is a form of transformational wisdom that is particularly important in a complex world of challenges today.

Philosophy

from Psychology Today

4 months ago What Makes Us Scared?

For example, clowns are not supposed to be scary. They are funny: singing, dancing, joke-telling jesters. But, if we change the context of the clown and their purpose, then it's reasonable to understand why they're scary. My dislike predates the horror movie IT (1990), which is said to represent a landmark increase in coulrophobia (fear of clowns) cases. I remember being around three or four

Film

from Harvard Business Review

4 months ago How Better Contracts Can Strengthen Strategic Partnerships

Writing a business contract is like predicting the future. It's a series of if/then statements - if this happens, then such-and-such party is responsible for that. The idea is that neither side really trusts the other. So a contract, backed up by the highest legal authority, gives a company something that it can put its trust in. That's why a firm's lawyers include every little thing they can think of.

Business

Artificial intelligence

from Harvard Business Review

4 months ago How to Lead When the Conditions for Success Suddenly Disappear

Workplaces face pervasive uncertainty from economic volatility, budget reallocations, rapid automation, and relentless productivity demands that strain resources and timelines.

from Psychology Today

4 months ago Want to Prepare for the Future?

What might this be? Well, I don't have a complete answer. But I am convinced that the foundation is developing the ability to live with intention. Intention is nothing other than the ability to make free and conscious choices, and when we are able to do this consistently, we become the authors of our own stories, protagonists who co-create the world rather than being determined by it.

Philosophy

Real estate

from London Business News | Londonlovesbusiness.com

4 months ago Uncertainty derails $2.5 trillion in global construction activity projected for 2025

Persistent uncertainty will erase $2.5 trillion from global construction activity this year, causing widespread descopes, delays, cancellations, and budget shortfalls.

from Psychology Today

4 months ago Spiritual Strength Requires Wisdom

The main argument here is that spiritual strength is fundamentally about cultivating wisdom. From a psychological perspective, spirituality isn't about dogma or belief; it is about developing the kind of wisdom necessary to face suffering without denial, accept uncertainty without despair, and discover meaning beyond the ego. Modern cognitive scientists, such as John Vervaeke, describe wisdom in two dimensions: moral (what serves the greater good, the long view) and cognitive (navigating complexity, managing strong emotions, and distinguishing the essential from the trivial).

Mental health

from Theregister

5 months ago OpenAI says models trained to make up answers

The admission came in a paper [PDF] published in early September, titled "Why Language Models Hallucinate," and penned by three OpenAI researchers and Santosh Vempala, a distinguished professor of computer science at Georgia Institute of Technology. It concludes that "the majority of mainstream evaluations reward hallucinatory behavior." Language models are primarily evaluated using exams that penalize uncertainty. The fundamental problem is that AI models are trained to reward guesswork, rather than the correct answer.

Artificial intelligence

from Psychology Today

5 months ago Why You Put Yourself Down When Waiting After a Job Interview

You feel like you've been on a roller coaster, constantly applying for jobs and searching for the perfect one. You've sent an updated resume and cover letter. You answered all the questions. You were asked to submit video answers to questions. And now you wait. Will you be called for an interview? After the tenth time of checking your email, the uncertainty in your head starts to take over, leading you to question yourself: "Why did I even try? I'm not as good as the other applicants. What makes me think they'd want me?"

Psychology
